Category: Programming

-

Migrating from Octopress to Jekyll

Back in 2014 I abandoned WordPress for Octopress. It’s been especially amazing for page load speeds, and I also enjoyed the fact that GitHub Pages are completely free - and I only need to pay for a domain name. Hosting a website can get expensive.

Octopress was a shortlived framework built on top of Jekyll, focused on blogging and designed to run on top of GitHub Pages. Unfortunately the development stopped in 2015, and now, 10 years later, I couldn’t set it up on a new machine due to most dependencies getting dangerously out of date.

I chose to migrate to vanilla Jekyll, since it’s a static site generator which is built on top of simple markdown and HTML files. Jekyll’s been around for some time, and I’m hoping Microsoft won’t be shutting down GitHub pages any time soon.

The whole process only took a couple of hours, and I’d like to document some highlights and lowlights. You might find it useful if you’re setting up a new Jekyll blog, or, like me, still have an Octopress blog that needs migrating.

Fresh setup

I went with a fresh Jekyll setup, by installing Jekyll and running

jekyll new blog. I successfully copied over old_postsandimages, and ported the relevant parts of_config.ymlfrom Octopress to vanilla Jekyll.Octopress uses liquid

{% img %}tags, which aren’t natively supported in Jekyll. I took the opportunity to convert those to markdown style syntax. I only have a few hundred posts, and I used a Vim macro to convert all{% img /foo/bar.png baz %}to.By default Jekyll comes installed with the

minimatheme, which I found to be mostly sufficient for my needs. I was able to override specific theme files by copying them from gem installation location to my blog directory and modifying them. Turned out to be straightforward and customizable. For example, I transferred the way Octopress pagination looks by modifying_layouts/home.html.For backward compatbility, I also had to move RSS feed to

/atom.xmlby modifying_config.yml:feed: path: /atom.xmlI could immediately run the site locally with

bundle exec jekyll serve --baseurl="".Missing functionality

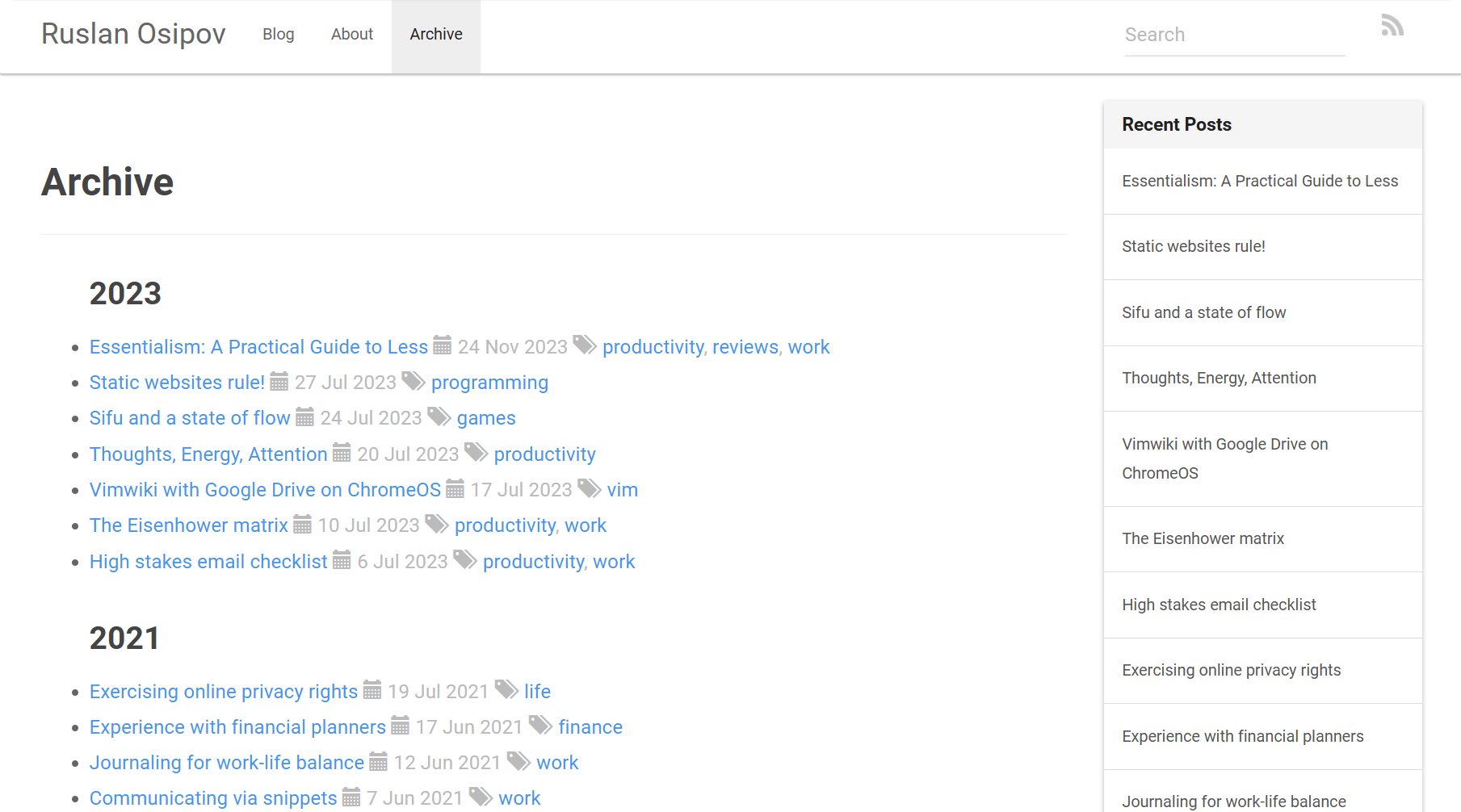

Two major things were missing straight out of the box: archive and category pages.

I grew attached to my archive page, and recreating it only took a couple of minutes. All I had to do is add an

archive.markdownpage to the site’s root directory:--- layout: page title: Archive navbar: Archive permalink: /blog/archive/ --- {%- assign date_format = site.minima.date_format | default: "%b %-d, %Y" -%} <div> <ul> {% for post in site.posts %} {% capture this_year %}{{ post.date | date: "%Y" }}{% endcapture %} {% unless year == this_year %} {% assign year = this_year %} <h2 style="margin-top: 1em;">{{ year }}</h2> {% endunless %} <li> <a href="{{ root_url }}{{ post.url }}" itemprop="url">{{ post.title }}</a> <span class="text-muted">| 📅 {{ post.date | date: date_format }}</span> </li> {% endfor %} </ul> </div>Building category support turned out to be messier and more complicated. I didn’t want to write up a custom solution, and ended up with some technical debt I’ll probably have to address in the future (wink-wink, this will never happen).

I used

jekyll-category-pagesgem, which worked okay-ish. The instructions on field-theory/jekyll-category-pages are extensive and aren’t too difficult to follow - I appreciated not having to write my own category pages, but I had to:- Stop category pages from being automatically added to the navigation bar.

- Disable pagination on category pages, because for some reason it really didn’t work with

jekyll-category-pages.

I also added my own basic category index pages by creating

categories.markdown:--- layout: page title: Categories navbar: Categories permalink: /blog/categories/ --- {% assign category_names = "" | split: "" %} {% for category in site.categories %} {% assign category_names = category_names | push: category[0] %} {% endfor %} {% assign category_names = category_names | sort %} <div> <ul> {% for category in category_names %} <li> <a href="{{ root_url }}/{{ site.category_path }}/{{ category | slugify }}">{{ category }}</a> </li> {% endfor %} </ul> </div>GitHub Pages

While GitHub Pages documentation is extensive, getting Jekyll to work with GitHub Pages took longer than I’d like to admit. Specifically,

Gemfilegenerated by runningjekyll new blogmisleadingly tells you to comment away the latest version of thejekyllgem and instead use thegithub-pagesgem:# Happy Jekylling! gem "jekyll", "~> 4.4.1" # If you want to use GitHub Pages, remove the "gem "jekyll"" above and # uncomment the line below. To upgrade, run `bundle update github-pages`. # gem "github-pages", group: :jekyll_pluginsYou don’t want to do that, oh no. Because the default GitHub Pages gem is stuck in the past on the 3rd version of Jekyll (and at the time of writing we’re on version 4), which caused all kind of hidden problems - including the fact that my URL slugs silently weren’t getting generated right. I switched back on the

jekyllgem and set up a custom GitHub action to deploy the site:name: Deploy Jekyll site to Pages on: push: branches: ["master"] # Allows you to run this workflow manually from the Actions tab workflow_dispatch: permissions: contents: read pages: write id-token: write concurrency: group: "pages" cancel-in-progress: false jobs: # Build job build: runs-on: ubuntu-latest steps: - name: Checkout uses: actions/checkout@v4 - name: Setup Ruby # https://github.com/ruby/setup-ruby/releases/tag/v1.207.0 uses: ruby/setup-ruby@4a9ddd6f338a97768b8006bf671dfbad383215f4 with: ruby-version: '3.1' # Not needed with a .ruby-version file bundler-cache: true # runs 'bundle install' and caches installed gems automatically cache-version: 0 # Increment this number if you need to re-download cached gems - name: Setup Pages id: pages uses: actions/configure-pages@v5 - name: Build with Jekyll run: bundle exec jekyll build --baseurl "$" env: JEKYLL_ENV: production - name: Upload artifact uses: actions/upload-pages-artifact@v3 # Deployment job deploy: environment: name: github-pages url: $ runs-on: ubuntu-latest needs: build steps: - name: Deploy to GitHub Pages id: deployment uses: actions/deploy-pages@v4Don’t forget to set “Build and deployment source” to “GitHub pages” in the repository settings to actually use the action.

My Octopress blog was set up in a

sourceGit branch, and content was generated into themasterbranch. I wanted to change that to have the source in the master branch (the action above won’t work without that), and I was able to replace mymasterwithsourcewith the following set of commands:git checkout master git pull git checkout source git merge -s ours master --allow-unrelated-histories git checkout master git merge sourceWe merge the

masterbranch intosourceusingoursmerge strategy (effectively ignoring themasterbranch history), and then merge that back intomaster.Positive experience

All in all migrating to Jekyll has been a great experience, which is a testament to Jekyll community’s dedication to thorough documentation. Knowing that Jekyll is a mature, maintained, and documented project, and that GitHub Pages infrastructure is reliable and supported, provides a sense of stability. I hope this results in Jekyll and GitHub Pages becoming a (reasonably) future-proof platform for my blog. But let’s check back in in 10 years - see you in 2035?

-

Static websites rule!

I hope you’ve noticed that navigating to this page was quick (let’s hope that the Internet Gods are kind to me, and nothing stood in the way of you accessing this page). In fact, most pages on my blog - hopefully including this one - should render in under a second. I didn’t put any work into optimizing this site, and it’s not a boast, nor is it a technological marvel - this is just a good old fashioned static website.

If this is new to you - static website is just what it sounds like - static HTML and CSS files, sometimes with some light JavaScript sprinkled throughout. There’s no server side processing – the only bottlenecks are the host server speed, recipient’s connection speed, and the browser rendering speed. Page is stored as is, and is sent over as soon as it’s requested. This is how the Internet used to be in late 90s and early 2000s (with eclectic web design to boot, of course).

I think static websites are cool and aren’t used nearly enough, especially for websites that are, well, static. Think to the last website you’ve visited to read something - maybe a news site, or maybe a blog. Now did it take at least a couple of seconds for them to load? Likely. Did their server have to waste unnecessary cycles putting together a page for you? Most definitely. Now, contrast this with your experience with a static website like this one. Here’s the result from pagespeed.web.dev for this page:

Every render complete in under a second, and I didn’t have to put in any work into optimizing my website.

This site is built on a (now unsupported) Octopress, which is itself built on top of Jekyll. You write pages in Markdown, generate web pages using a pre-made template, and deploy the resulting pages to a hosting provider. In fact, GitHub Pages allow you to host your static website for free, and you can have a third party platform like Disqus provide comment support.

Static websites work great for portfolios, blogs, and websites that don’t rely on extensive common manipulation. They’re more secure (no backend to hack), simple to build and maintain, very fast even without optimization, and are natively SEO friendly (search engines are great at understanding static pages). Static websites are cheap to run - I only pay for a domain name for this site (under $20 a year).

If you have a blog or a portfolio and you’re using an overly complicated content management system to write - consider slimming down. Jekyll (or many of its alternatives) offers a number of pre-made off-ramps for major CMS users, is easy to set up, and is straightforward to work with. Can’t recommend enough - static websites rule!

-

Making a packing spreadsheet

Being the unique person I am, I love traveling. Oftentimes I end up getting deep into trying to optimize my packing methods. There are dozens of tools online designed to help with this exact thing (services like Lighterpack or GearGrams). But, being handy with code, I decided to dabble in the subject on my own.

One of the most important things in packing is the overall weight of the pack, and I always want to know what type of things are the heaviest. I also want to be able to run random queries on my data, whatever it is that I’m trying to learn. I want to have an inventory of items (backpacks, clothes, storage solutions) which I can plug in and out of a spreadsheet to see how the resulting picture changes on the go. Working with software as my day job, I’d also like for the solution to be automated whenever possible.

Google Spreadsheets turned out to be the perfect solution, providing the ability to quickly sum up the weight of my things and output insights about the data.

Final Result

Here’s a link to the spreadsheet, I encourage the reader to copy and play around with in anyway you see fit.

Here’s the final result for a multi-day trip I will have for this year. As you can see, my pack weighs around 3 kilograms or a bit over 6 freedom units (not including water). My recently purchased Nintendo Switch is the heaviest item (and it’s worth every gram as it makes flying tolerable), but clothes take up most of the weight:

I use indentation levels to show that some items are contained within other items. This also lets me calculate the absolute and relative weights of a whole container with everything inside of it (see fields labeled “Container” and “Percentage”).

The “Weight” and the “Breakdown” fields indicate absolute and relative item weight respectively, which accounts for quantity of the item (quantity defaults to 1 if not explicitly set). Weight-related fields are color coded from lightest items in green to heaviest items in red.

Categories are used to group items and execute queries on the data and learn insights like these:

There’s a separate sheet where I enter item names, categories, and weights, which I then use to automatically populate values above and autofill item names in the primary sheet:

The Technical Details

I haven’t worked with Google Spreadsheets (or Excel for that matter) a lot in the past, but with an access to clear documentation (and a hundred of web searches later) it was straightforward to get the hang of the it.

I started off by manually populating the final result sheet, manually adjusting formulas for Container/Percentage cells, as I had no idea how I would detect the indentation yet. I like when things look pretty, so I applied conditional formatting rules right away, and the looks of the sheet really haven’t changed throughout the process.

Next, I created an inventory sheet, which I wanted to use as a source of data in the resulting sheet. A few Google searches and some trial & error resulted in a lookup formula:

=ArrayFormula( IF( NOT(ISBLANK($B2)), INDEX(InventoryCategories, MATCH($B2, InventoryItems, 0)), IF( NOT(ISBLANK($C2)), INDEX(InventoryCategories, MATCH($C2, InventoryItems, 0)), INDEX(InventoryCategories, MATCH($D2, InventoryItems, 0)) ) ) )ArrayFormulais necessary in order to create arrays on the fly without printing intermediate results in the spreadsheet.InventoryItemsandInventoryCategoriesare named ranges referring to item names and categories in the inventory sheet.MATCHfinds an index of a first occurrence of the item name in the sheet, and retrieves corresponding category name. An item weight is retrieved by the exact same principle.Trying to find the container weight took a lot more time, and resulted in a lot more headache. Solution for handling indentation in Google Spreadsheets wasn’t as elegant as I would have hoped for, but it got the job done:

=ArrayFormula( SUM( $I2:INDIRECT( CONCATENATE( "$I", ROW() + IF( NOT(ISBLANK($B2)), MATCH(FALSE, ISBLANK($B3:$B), 0), MATCH(FALSE, ISBLANK($C3:$C), 0) ) - 1 ) ) )The formula above finds the first non-empty cell in a column. It starts searching from the next row (for example, for an item on a second row, we look at the third row and below). After it knows which row first non-empty cell is in, the formula turns it into a string (column $I contains item weights) and use it as an upper bound of a sum of the weights. Finished formula is a bit longer (it adds sugar to only display the number when needed), and if you’re interested - you can look it up in the spreadsheet.

For example, in the screenshot above, the formula will start looking at cells in a column right after the “Packing cube”. As soon as it finds a non-empty cell (“Nintendo Switch case”), the formula will determine this row to be an upper boundary. The formula then will sum weights starting with a “Packing cube” row, and up to but not including “Nintendo Switch case” row.

The rest involved many small tweaks, adding pretty colors, hiding N/A errors and zeroes, and trying to find the perfect shade for column borders.

And, since you made it this far, here’s how the numbers above look in the real world:

Hopefully you found this useful, or at least somewhat entertaining. There’s a lot of room for improvement, and I aimed to provide a framework and a few basic ideas for building a spreadsheet to accommodate one’s twisted ultralight needs.

-

Automating Octorpress publishing

I really like Octopress. It builds on top of Jekyll to address certain rough edges, and provides ready to go lighting fast blogging platform. It’s easily extendible if you know how to code (thanks to a rather clean and well organized code base), and posts are just plain Markdown files.

One issue though - I need to be near a computer to publish and preview the article. This becomes difficult if I’m traveling with, say, a tablet.

I have a low end AWS Lightsail instance I use for writing and publishing, but it’s not always fun to write when SSHing into a server, and I often write offline - making it even more difficult to move files between where I write and where I publish. And managing images is a nightmare. To resolve this, I set up a few directories I use in Dropbox, and wrote a few scripts to move things around when needed.

Here’s a directory structure in Dropbox:

- blog/ - posts/ - 2017-11-20-automatic-octopress-publishing.markdown - img/ - input/ - a-picture-of-a-flower.jpg - output/I put Markdown files in

Dropbox/blog/posts/(and numerous offline editors sync with Dropbox - I’m writing this with StackEdit, and I use iA Writer on my tablet). I add my images toDropbox/img/input/. I tend to strip metadata from my images and resize them to fit the maximum page width (I don’t really care for high resolution pictures, speed is preferred over ability to zoom into a picture). For this, two tools are needed:sudo apt-get install imagemagick exiftoolWhen I’m done writing or want to preview an article, I SSH into my AWS Lightsail instance and run

sync.sh, a small script which moves posts to a proper directory, processes images and places them in the desired location, as well as starts Octopress instance (this way I can preview my blog on the AWS Lightsail instance). Contents ofsync.sh(don’t forget tochmod +x):#!/bin/bash cd $HOME/Dropbox/blog/img/input mogrify -resize 832x620 -quality 100 -path $HOME/Dropbox/blog/img/output *.jpg exiftool -all= $HOME/Dropbox/blog/img/output/*.jpg cp $HOME/Dropbox/blog/posts/*.markdown $HOME/blog/source/_posts cp $HOME/Dropbox/blog/img/output/*.jpg $HOME/blog/source/images/posts cd $HOME/blog rake generate rake previewI run the above script every time I want to preview the site. I’m sure it’s easy to set up a daemon to watch for changes in the Dropbox folders, but I don’t see the need for that yet. Also, I just statically resize images to a particular resolution (832x620) since all of the pictures I upload have the same aspect ratio, I’m sure there’s a way to calculate that number on the fly for this script to work with different aspect ratios.

Lastly, when I deployed and committed my changes (still commit and deploy manually out of a habit), I run

archive.sh:#!/bin/bash mv $HOME/Dropbox/blog/posts/*.markdown $HOME/Dropbox/blog/published rm $HOME/Dropbox/blog/img/input/* rm $HOME/Dropbox/blog/img/output/*It’s not much, but enough to save some manual labor involved in publishing to Octopress.

-

Mob level distribution

Distributing mobs in a dungeon based on player’s level (or some dungeon level difficulty factor) was somewhat straightforward, but I would still like to document the progress. I needed to place a mob that’s somewhat within the difficulty level I want, plus minus a few difficulty levels to spice it up.

Above you can see three rats, three cats, a dog (

r,c,d, all level 1), a farmer (f, level 2), and a lonely bandit (b, level 3) in a level 1 dungeon.Without going straight into measure theory, I generated intervals for each mob based on the diff of desired level and their level, and then randomly selected a point within the boundaries. Here’s the abstract code:

import bisect import random def get_random_element(data, target, chance): """Get random element from data set skewing towards target. Args: data -- A dictionary with keys as elements and values as weights. Duplicates are allowed. target -- Target weight, results will be skewed towards target weight. chance -- A float 0..1, a factor by which chance of picking adjacent elements decreases (i.e, with chance 0 we will always select target element, with chance 0.5 probability of selecting elements adjacent to target are halved with each step). Returns: A random key from data with distribution respective of the target weight. """ intervals = [] # We insert in order, no overlaps. next_i = 0 for element, v in data.iteritems(): d = max(target, v) - min(target, v) size = 100 while d > 0: # Decrease chunk size for each step of `d`. size *= chance d -= 1 if size == 0: continue size = int(size) intervals.append((next_i, next_i + size, element)) next_i += size + 1 fst, _, _ = zip(*intervals) rnd = random.randint(0, next_i - 1) idx = bisect.bisect(fst, rnd) # This part is O(log n). return intervals[idx - 1][2]Now, if I test the above for, say, a 1000000 iterations, with a chance of 0.5 (halving probability of selecting adjacent elements with each step), and 2 as a target, here’s the distribution I end up with:

target, chance, iterations = 2, 0.5, 1000000 data = collections.OrderedDict([ # Ordered to make histogram prettier. ('A', 0), ('B-0', 1), ('B-1', 1), ('C', 2), ('D', 3), ('E', 4), ('F', 5), ('G', 6), ('H', 7), ('I', 8), ('J', 9), ]) res = collections.OrderedDict([(k, 0) for k, _ in data.iteritems()]) # This is just a test, so there's no need to optimize this for now. for _ in xrange(iterations): res[get_random_element(data, target, chance)] += 1 pyplot.bar( range(len(res)), res.values(), width=0.7, align='center', color='green') pyplot.xticks(range(len(res)), res.keys()) pyplot.title( 'iterations={}, target={}, chance={}'.format( iterations, target, chance)) pyplot.show()

You can see elements B-0 and B-1 having roughly the same distribution, since they have the same weight.

Now, if I decrease the

chance, likelihood of target being selected increases, while likelihood of surrounding elements being selected decreases:

I works the opposite way as well, increasing the

chancedecreases likelihood of the target being selected and increases the probability for surrounding elements.

For the sake of completeness, it works with 0 chance of surrounding elements being picked:

And an equal chance of picking surrounding elements:

After playing around with the configuration in Jupyter Notebook, I cleaned up the algorithm above and included it into mob placement routine.

-

Spawning evenly distributed mobs

After generating a few good looking random dungeons, I was puzzled with randomly placing mobs on resulting maps. To make it fair (and fun) for the player mobs should be evenly distributed.

The obvious idea was to pick coordinates randomly within the rectangular map boundaries, and then place mobs if they have floor underneath them. But this way I lose control of the number of mobs and risk having a chance of not placing any mobs at all. Plus, dungeons with bigger surface area would get more mobs - which sounds somewhat realistic, but not entirely what I was aiming for.

I could improve on the above by rerunning enemy placement multiple times and select the most favorable outcome - but the solution would be rather naive.

To have control over the number of mobs I decided to place them as I generate the rooms of the dungeon. There’s a trick one can use to get a random element with equal probability distribution from a sequence of an unknown size:

import random def get_random_element(sequence): """Select a random element from a sequence of an unknown size.""" selected = None for k, element in enumerate(sequence): if random.randint(0, k) == 0: selected = element return selectedWith each iteration the chance of the current element to become a selected item is 1 divided by number of elements seen so far. Indeed, a probability of an element being selected out of a 4-item sequence:

1 * (1 - 1/2) * (1 - 1/3) * (1 - 1/4) = 1/2 * 2/3 * 3/4 = 6/30 = 1/4Now all I had to do is to modify this to account for multiple mob placement. Here’s a generalized function above which accounts for selecting

nelements from the sequence with even distribution.import random def get_random_element(sequence, n): """Select n random elements from a sequence of an unknown size.""" selected = [None for _ in range(n)] for k, element in enumerate(sequence): for i in range(n): if random.randint(0, k) == 0: selected[i] = element return selectedI incorporated logic above into the room generation code, accounted for duplicates, and ended up with decent distribution results.

-

Randomly generated dungeons

After playing with random dungeon generation for a bit, I seem to be satisfied with the results:

The above is displayed using ADOM notation -

#represents a wall,.is a floor tile, and+is meant to be a closed door. After fiddling about with a few random dungeon generation ideas, I settled with the following.The algorithm

- Start with a random point on a canvas.

- Create a room with random width and height. Don’t worry about walls yet.

- Select a few points on the sides of the room, put those in a stack. Save the direction in which the potential doors would point.

- Go through the stack, and try to add a room or a corridor (corridor is just a

room with a width or a height of 1). Higher chance of corridors seems to look

better and results in those wiggly passageways.

- Try to add a room a few dozen times with different random configurations. If no luck - give up and grab a new item from the stack. If couldn’t generate a continuation to the corridor - mark it as a loose end, we’ll clean those up later.

- If a room was added successfully - add a door where it connects to the previous room/corridor. Add a floor section if it’s a corridor connecting to another corridor.

- At this point one ends up with quite a few interconnected corridors, merged rooms, and all kinds of fun surroundings (my desired goal).

- Do the above until the stack is empty or a desired number of rooms has been generated.

- Clean up the loose ends from step 4.1. Remove loose corridor segments one by one until intersection with another room/corridor is detected.

- Add walls around the rooms and corridors, while also cleaning up any extra

doors we may have left behind when creating merged corridors or rooms.

- I simply used slightly modified depth first search starting inside any room and drawing walls wherever I could find floor/door not connected to anything.

- When encountering a door - check if it’s surrounded by walls or doors from the opposite sides. If not - remove the door and replace it with a floor tile. If any doors were adjucent to the removed door - requeue the door check on them.

- Perform steps 1-7 a few hundred times, saving the resulting dungeons each time. Pick the best candidate with the most desired features - like a number of rooms, breadth, square footage, longest corridors, etc.

A more detailed explanation of the steps is below. For now, here are a few more dungeons generated using this method:

I think dungeon generation is far more art than science, and I had a lot of fun tweaking all the different input parameters:

- Room size boundaries.

- Corridor lengths.

- Frequency of corridor occurrences.

- Number of exits from the room.

- Number of room generation attempts.

- Number of dungeon generation attempts.

- Final dungeon picking heuristics.

Last item on the list is the most interesting one - with few hundred dungeons as an input, picking the right one is rather important. I ended up settling on using max priority queue with a rough surface area of the dungeon as a key (it’s more of a breadth, really - how wide and tall it is). Then I’d sift through some top results and pick the one with the most rooms available. This results in the most fun-looking map which feels up most of the screen, while still usually not being too cluttered.

Here’s a breakdown of a simple scenario:

Steps 1 and 2

Pick a random spot on a canvas and generate a room of random size (4 x 3):

.... .... ....Step 3

Select potential spots for doors, let’s label them 1, 2, 3.

....2 .... ....1 3I went for a uniform distribution by unfolding the rectangle and folding it back in to get a proper coordinate on the perimeter. Now, stack contains coordinates of

[1, 2, 3](along with the directions in which they are pointing).Steps 4 and 5

Add a room or a corridor to a connector number 3. We’ll be adding the room to the right of number 3. Let’s assume random sends a corridor of length 5 our way. We’re happy with the corridor pointing either up, down, or right - so we let the random decide again: up.

4 . . ....2. .... . ....3. 1We add the end of the corridor to the stack as number 4 (now

[1, 2, 4]). We also mark 4 as a loose end, in case we end up not adding a room to it. Dangling corridors are never pretty.Now, to replace number 3 with a door:

4 . . ....2. .... . ....+. 1Adding another random corridor of length 2 to the point 4, pointing right. Replace number 4 with a floor segment, since point 4 was the end of another corridor. Remove point 4 from loose ends, add point 5.

...5 . . ....2. .... . ....+. 1Take point 5, generate a room of size 3 x 6. 5 becomes a door. Loose ends list is empty.

...+... . ... . ... ....2. ... .... . ... ....+. ... 1For simplicity’s sake, let’s assume we don’t want any more exits from this room. Back to the stack of

[1, 2]. Point 2 seem to not have much room for growth. After a few unsuccessful attempts to place a room or a corridor there, we give up:...+... . ... . ... .... . ... .... . ... ....+. ... 1Now for point 1: we get another corridor of length 3. Point 6 is now added to the loose ends list.

...+... . ... . ... .... . ... .... . ... ....+. ... + . . . 6Let’s assume we run out of space and can’t add anything to the end of 6. We’re done generating the dungeon. Our stack is empty, and our loose ends contains coordinates of

6.Step 6

Start with the loose end, and remove items one by one until a tile with multiple neighbors is encountered:

...+... . ... . ... .... . ... .... . ... ....+. ... X X X X XViola:

...+... . ... . ... .... . ... .... . ... ....+. ...Steps 7 and 8

There are no rogue doors in this scenario, so all we need to do is add the walls:

######### #...+...# #.###...# ######.# #...# #....#.# #...# #....#.# #...# #....+.# #...# ######## #####All of the steps above should be repeated a few hundred times with different dungeons, and then the best dungeon should be picked as a final one.

Did I miss anything? Was cleaning up “loose ends” too much of a hack? What should have I done differently?

-

Contributing to an existing Octopress blog

I had to download my Octopress blog to a new machine today and the process of setting up didn’t go as smoothly as I expected. At the end of the day the setup turned out to be simple, and here are the steps:

git clone -b source https://github.com/username/username.github.io myblog cd myblog git clone https://github.com/username/username.github.io _deploy bundle installIn a nutshell, you have to manually add

_deployfolder set to trackmasterbranch of your Octopress repository. Otherwiserake deploycommand fails.Happy writing!

-

One more argument for 80 character limit

Limiting code to 80 (or 100 or 120) characters per line. It’s a well-known topic, and there’s an overall consensus on the subject, not counting occasional questions by newbies and odd cases. Most established tech companies have their own line length guidelines. These are often dependent on a language, such as in the case of Google with their 80 character Python and 100 character Java limits.

In this article, I won’t be advocating all the usual arguments, such as easier diff with version control software, or the ability to stack windows side by side on wide screens. No, I believe that battle to be won quite a long time ago, the topic is now closed. But something I didn’t find mentioned in any of the discussions is an interesting point from the world of writers and designers.

Ever since I started being interested in improving my writing skills, I found article after article mention the importance of line length in reading. Interestingly enough, this issue was raised in a world of literature. It had been resolved long before programmers were fascinated with the desire to use up rapidly expanding screen real estate.

I am talking about something known as “measure” in typography. It seems to be the reason newspapers use narrow columns, or books leave such vast margins around the text on a page. Here’s an exempt from the Wikipedia article:

Measure (or sometimes “The Measure”) in typography is the length of a line of text. For a single-column design measure should ideally lie between 40 & 80 characters. Many typographers consider the perfect measure to be 65 characters. If the lines are too short then the text becomes disjointed, if they are too long the content loses rhythm as the reader searches for the start of each line. Punctuation should preferably hang outside the measure.

Most programming languages use special operators and keywords, which can be considered “punctuation”. Some languages are more verbose (use more punctuation), and some aren’t. If you remove punctuation, the 80/100/120 character limit perfectly fits the standard time-honed “measure”.

Maybe I can use this as an additional argument the next time I have to explain to a junior new hire why the character limits are so strictly enforced.

-

Abandoning WordPress for Octopress

I finally did it! I’ve left the dark corners of WordPress in favor of Octopress, which seem to be the best system for my blogging needs.

Why the migration?

WordPress was a great host for me at one point - the community is fun and no work is required for setup (I would rather not waste time fiddling with my blog too much). But a number of things are off-putting:

- I don’t like WYSIWYG editors and I don’t like web editors. I try to use my favorite editor for everything, including mail, documentation, note taking. Even though WordPress allowed me to write my posts using Markdown, I still didn’t like not being able to use my favorite editor or environment.

- Storing all the entries in a MySQL database is not favorable. I am writing text, why would I pick any other format to store what I wrote?

- Over the years my blog became increasingly slow, and in order to have a reasonably fast site I needed to pay quite a sum of money. I am not ready for such a commitment, since I only occasionally update this blog.

On the other hand, Octopress (which is based on Jekyll, a static site generator) has all of these concerns covered:

- I can write by creating simple Markdown documents. It doesn’t matter where I write, or how I write. This enables me to use an editor of my choice.

- Plain text is universal. It can be viewed by a human, edited in any piece of software, printed out, emailed, piped to a Linux program - just about anything really.

- Static sites are ridiculously fast. There’s no preprocessing and no rendering. The only real speed limit is network latency. In addition, GitHub lets you host static sites for free. What else could you ask for?

The process is painless

Switching from WordPress to Octopress was very easy, and it took me only a few hours (spread out throughout one weekend). Some highlights:

- Comments in Octopress are handled by Disqus, and I had to set that up on my WordPress blog before transitioning in order to transfer all the comments.

- I used the WordPress to Jekyll Exporter tool to export all my posts from WordPress to Markdown (with meta-data which Octopress understands). I only had a bit over 50 posts to worry about, so the process was complete in an instant.

- I ran some custom Vim macros to remove and change some excess meta data, as well as clean up Markdown formatting. I am a perfectionist, and the exporter tool left me with a poorly formatted document. I couldn’t let that happen.

Results are breathtaking

After only a few hours of work, I had a lightning fast, simple, and pragmatic blog. There is no excess configuration, comments are handled externally, and the source code for the site and articles is available on GitHub. I am able to write my posts using Markdown, in my favorite editor, and use git to publish the resulting articles.

-

Export WordPress posts to plain text

I prefer to create and edit my posts in plain text. It’s a timeless format, and I find it nice to have an archive of posts lying around in plain text.

I wrote a little Python script which I used to extract an archive of my posts and pages into a bunch of files on my computer. In order to use it, you need to use “WordPress Importer” plugin. Export your posts and pages into a WXR format, and feed the file to the script.

Source code of the script is below (link for downloading the script is at the bottom of this post):

#!/usr/bin/env python """This script converts WXR file to a number of plain text files. WXR stands for "WordPress eXtended RSS", which basically is just a regular XML file. This script extracts entries from the WXR file into plain text files. Output format: article name prefixed by date for posts, article name for pages. Usage: wxr2txt.py filename [-o output_dir] """ import os import re import sys from xml.etree import ElementTree NAMESPACES = { 'content': 'http://purl.org/rss/1.0/modules/content/', 'wp': 'http://wordpress.org/export/1.2/', } USAGE_STRING = "Usage: wxr2txt.py filename [-o output_dir]" def main(argv): filename, output_dir = _parse_and_validate_output(argv) try: data = ElementTree.parse(filename).getroot() except ElementTree.ParseError: _error("Invalid input file format. Can not parse the input.") page_counter, post_counter = 0, 0 for post in data.find('channel').findall('item'): post_type = post.find('wp:post_type', namespaces=NAMESPACES).text if post_type not in ('post', 'page'): continue content = post.find('content:encoded', namespaces=NAMESPACES).text date = post.find('wp:post_date', namespaces=NAMESPACES).text title = post.find('title').text date = date.split(' ')[0].replace('-', '') title = re.sub(r'[_]+', '_', re.sub(r'[^a-z0-9+]', '_', title.lower())) if post_type == 'post': post_filename = date + '_' + title + '.txt' post_counter += 1 else: post_filename = title + '.txt' page_counter += 1 with open(os.path.join(output_dir, post_filename), 'w') as post_file: post_file.write(content.encode('utf8')) post_counter += 1 print "Saved {} posts and {} pages in directory '{}'.".format( post_counter, page_counter, output_dir) def _parse_and_validate_output(argv): if len(argv) not in (2, 4): _error("Wrong number of arguments.") filename = argv[1] if not os.path.isfile(filename): _error("Input file does not exist (or not enough permissions).") output_dir = argv[3] if len(argv) == 4 and argv[2] == '-o' else os.getcwd() if not os.path.isdir(output_dir): _error("Output directory does not exist (or not enough permissions).") return filename, output_dir def _error(text): print text print USAGE_STRING sys.exit(1) if __name__ == "__main__": main(sys.argv)You can download the script from here: wxr2txt.py.

-

Python: "ignored" context manager

There was a recent fantastic addition to Python 3.4 by Raymond Hettinger:

contextlib.ignored. It’s a context manager which lets you shorten the following often-occurring pattern:try: os.remove('i_probably_do_not_exist.txt') except OSError: passAnd turn it into this:

with ignored(OSError): os.remove('i_probably_do_not_exist')Much shorted and prettier. But, as probably most of engineers, you have to use older version of python in production. That’s where this little chunk of code comes in. Create a little

compat(as in “compatibility”) library and add following function:import contextlib @contextlib.contextmanager def ignored(*exceptions): try: yield except exceptions: passBeautiful!

UPDATE: As Andy pointed out in comments, this context manager has been renamed to

contextlib.suppress(https://bugs.python.org/issue19266). -

Making Django and Lettuce play nice together

Lettuce is a great BDD tool which allows you to parse expressions written via Gherkin syntax in python. However the documentation is not very comprehensive, and at the moment current version (0.2.19) has some issues integrating with the latest Django (1.6.1 at the moment of writing). Without further ado, I’ll get to a comprehensive tutorial.

Let’s assume you are using

pipandvirtualenvfor the dependency control, and you already have a working project configured. Your project is called “myproject”, and the only app you have within your project is called “polls”.Setup

First, you have to install

lettucelibrary. At the moment of writing, current released version (0.2.19) has an error integrating with Django, so we’ll install current development version. Releases 0.2.20 and up should include the fix, sopip install lettucewould be better if the version is out.pip install -e \ git://github.com/gabrielfalcao/lettuce@cccc397#egg=lettuce-master pip install django-nose splinter pip freeze > requirements.txtFirst line downloads lettuce package from the github repository and installs missing dependencies. You can replace

cccc397with the current commit. Technically commit can be omitted, but we don’t want to have an unstable ever-changing branch in ourrequirements.txt. I also addeddjango-nosesince nose assertions come in handy while writing Lettuce steps, as well assplinter, which is a great tool for testing web application.Add Lettuce to the

INSTALLED_APPSin yourmyproject/settings.py:INSTALLED_APPS = ( 'django.contrib.auth', 'django.contrib.contenttypes', 'django.contrib.sessions', 'django.contrib.sites', 'django.contrib.messages', 'django.contrib.staticfiles', 'django.contrib.admin', 'django.contrib.admindocs', # ... third party apps ... 'lettuce.django', 'myproject', 'polls', )You also have to explicitly specify the apps you want to use with lettuce:

LETTUCE_APPS = ( 'polls', )By default, lettuce will run its’ tests against your default database. But we want to use test database for that, so we have to add few more settings:

LETTUCE_TEST_SERVER = 'lettuce.django.server.DjangoServer' LETTUCE_SERVER_PORT = 9000Where

LETTUCE_TEST_SERVERis a subclass of Django’sLiveTestServerCase- a class which runs a test server for you andLETTUCE_SERVER_PORTis different from port 8000 so you won’t have issues running the development server viapython manage.py runserverat the same time as running Lettuce tests.You also have to create a

featuresdirectories inside the apps you want to test with Lettuce:/myproject /myproject __init__.py settings.py urls.py wsgi.py /polls /features /steps __init__.py polls_list.py polls_list.feature __init__.py models.py tests.py views.py manage.py requirements.txt terrain.pyLettuce has its’ own settings file called

terrain.py. It has to be in the same directory as amanage.py:from django.core.management import call_command from django.conf import settings from lettuce import before, after, world from splinter.browser import Browser @before.each_scenario def flush_database(scenario): call_command('flush', interactive=False, verbosity=0) @before.each_scenario def prepare_browser(scenario): world.browser = Browser() @after.each_scenario def destroy_browser(scenario): world.browser.quit()This code flushes the database before each scenario, as well as prepares and destroys the

splinterbrowser.Writing the features

Feature files support standard Gherkin syntax, let’s write one right now in

polls/features/polls_list.feature:Feature: Polls list Scenario: Polls list without any polls When I access the "polls list" url Then I see a text "We didn't find any polls!" Scenario: Polls list with one poll Given a poll with the title "Hello world" When I access the "polls list" url Then I see a text "Hello world" And I do not see a text "We didn't find any polls!"Now describe the steps in

polls/features/steps/polls_list.py:from django.core.urlresolvers import reverse from lettuce import step, world from lettuce.django import django_url from nose.tools import assert_in, assert_not_in from polls.models import Poll PAGES = { "polls list": "polls:list" } @step(r'access the "(.*)" url') def access_the_url(step, name): world.browser.visit(django_url(reverse(PAGES[name]))) @step(r'see a text "(.*)"') def see_a_text(step, text): assert_in(text, world.browser.html) @step(r'a poll with the title "(.*)"') def create_a_poll_with_the_title(step, title): poll = Poll.objects.create(title=title) polls.save() @step(r'do not see a text "(.*)"') def do_not_see_a_text(step, text): assert_not_in(text, world.browser.html)Running the tests

Now, you can run

python manage.py harvest --test-serverto run the tests you just wrote:$ python manage.py harvest --test-server Creating test database for alias 'default'... Django's builtin server is running at 0.0.0.0:9000 Feature: Polls list Scenario: Polls list without any polls When I access the "polls list" url Then I see a text "We didn't find any polls!" Scenario: Polls list with one poll Given a poll with the title "Hello world" When I access the "polls list" url Then I see a text "Hello world" And I do not see a text "We didn't find any polls!" 1 feature (1 passed) 2 scenarios (2 passed) 6 steps (6 passed) Destroying test database for alias 'default'...Don’t forget the

--test-serverswitch - otherwise Lettuce will run tests against your default database.Sources

You can find some more details on Lettuce and Django integration here: Web development fun with Lettuce and Django.

Update

Rather than using

--test-serverswitch, it’s easier and safer to set a flag in yoursettings.py(suggested by Michel Sabchuk):LETTUCE_USE_TEST_DATABASE = TrueThis way you won’t end up accidentally erasing your production database after forgetting to add

--test-serverflag. -

Python doctests and decorators bug

Now, this as well might be a feature, but doctest strings are not being executed for decorated functions (at least in version 2.7). However, there is a workaround.

You need to decorate your functions with

functools.wrapswithin a decorator to import docstrings into a decorator scope.#!/usr/bin/env python from functools import wraps def decorator(func): @wraps(func) def wrapper(): return func() return wrapper @decorator def foo(): """ >>> foo() False """ return True import doctest doctest.testmod()Now you can see this test failing, where otherwise it would have been ignored:

********************************************************************** File "decorator.py", line 12, in __main__.foo Failed example: foo() Expected: False Got: True ********************************************************************** 1 items had failures: 1 of 1 in __main__.foo ***Test Failed*** 1 failures. -

Python tests with doctest and unittest

When it comes to tests,

doctestis a great simple module to write tests for your application. However it is pretty basic and does not have any extended features like, for example, centralized unit tests. If you have multiple modules with doctests (and you probably do) you most likely want to be able to run all doctests recursively from one place. That’s whereunittestcomes in.Let’s assume we store modules in the

lib/directory:$ ls lib/ __init__.py bar.py foo.pyHere are the contents of

foo.pyandbar.pyrespectfully:def foo(): """ >>> foo() False """ return False def bar(): """ >>> bar() True """ return True def baz(): """ >>> baz() False """ return FalseNow, to run all tests we need a wrapper script. Let’s call it:

runtests.py:#!/usr/bin/env python import unittest import doctest import os files = [] root_dir = 'lib/' for root, _, filenames in os.walk(root_dir): for filename in filenames: if filename == '__init__.py' or filename[-3:] != '.py': continue f = os.path.join(root, filename) f = f.replace('/', '.') f = f[:-3] files.append(f) suite = unittest.TestSuite() for module in files: suite.addTest(doctest.DocTestSuite(module)) unittest.TextTestRunner(verbosity=1).run(suite)This approach invokes the

doctest.DocTestSuitemethod, which converts doctests strings into unittest suites. Time to run our tests:$ chmod +x runtests.py $ ./runtests.py ... ---------------------------------------------------------------------- Ran 3 tests in 0.008s OKAnd just to be sure that approach actually works, let’s make one of the tests fail:

$ ./runtests.py .F. ====================================================================== FAIL: baz (lib.bar) Doctest: lib.bar.baz ---------------------------------------------------------------------- Traceback (most recent call last): File "/usr/lib/python2.7/doctest.py", line 2201, in runTest raise self.failureException(self.format_failure(new.getvalue())) AssertionError: Failed doctest test for lib.bar.baz File "/home/rosipov/unitdoc/lib/bar.py", line 8, in baz ---------------------------------------------------------------------- File "/home/rosipov/unitdoc/lib/bar.py", line 10, in lib.bar.baz Failed example: baz() Expected: True Got: False ---------------------------------------------------------------------- Ran 3 tests in 0.009s FAILED (failures=1) -

pygame.font not found

I had an issue with pygame not being able to find a dependency for the font module. After quite a time-consuming search the missing package name was

libsdl-ttf2.0-dev.Hope this helps someone.

-

Rails and MongoDB with Cygwin

Setting up Ruby on Rails with MongoDB on a Windows machine.

You need to have cygwin installed with

rubyandgitpackages (obviously you may want to have more).The following commands are executed in the cygwin prompt:

git clone git://github.com/rubygems/rubygems.git cd rubygems/ ruby setup.rb gem install railsGo to the MongoDB website and download Windows binaries: http://www.mongodb.org/downloads. Extract the content of the

bin/directory toC:\cygwin\usr\local\bin.Create a directory for the db files (the default MongoDB db files directory is

C:\datadb):cd /cygdrive/c mkdir data mkdir data/dbDone! Both mongo and rails are in your cygwin’s path now, feel free to tweak it as you see fit.

-

C strtok usage example

I had some issues with the

strtokfunction. Here’s a detailed explanation and usage for it.The

strtokfunction is used to tokenize a string and thus separates it into multiple strings divided by a delimiter.#include <stdio.h> #include <string.h> int main() { int j, i = 0; char delim[4]; char user_input[81], *token[80]; user_input[0] = 0; // set first byte to 0 to detect empty string // validate the string length while (strlen(user_input) <= 1 || strlen(user_input) > 82) { printf("Feed me a string to tokenize: "); fgets(user_input, sizeof(user_input), stdin); } printf("And a delimiter (up to 4 chars): "); fgets(delim, sizeof(delim), stdin); token[0] = strtok(user_input, delim); // first call returns pointer // to first part of user_input // separated by delim while (token[i] != NULL) { i++; token[i] = strtok(NULL, delim); // every call with NULL uses // saved user_input value and // returns next substring } for (j=0; j<=i-1; j++) { printf("%sn", token[j]); } return 0; }Let’s compile and execute it:

Feed me a string to tokenize: foo/bar/baz And a delimiter: / foo bar bazThe first call to

strtokreturns the pointer to the first substring. All the next calls with the first argument beingNULLuse the string passed at the first call and return the next substring. The function returnsNULLif no more substrings are available. -

Create gitolite repository

A reminder on how to initialize a fresh gitolite repository, assuming that gitolite has already been set up.

All actions are performed on a local machine. In this case:

~/gitolite-adminis admin repository,~/foois desired repository,rosipov.comis gitolite hostname. Commandvistands for the text editor, but you may use whichever editor you prefer.cd ~/gitolite-admin vi conf/gitolite.confAdd lines (obviously you may want to use individual users instead of @all):

repo foo RW+ = @allSave it. Next:

git add conf/gitolite.conf git commit -m "Add foo repo for @all" git pull --rebase && git push mkdir ~/foo cd ~/foo git init git remote add origin git@rosipov.com:foo.gitAdd some files at this point. In this example, only

.gitkeepis added.git add .gitkeep git commit -m "Initialize repo" git push origin masterThe new repository is all set up now.

-

C - Reallocating memory for the array

I just started delving into C and I had some issues with increasing the memory allocation size for my array. The best way to understand something - write a detailed explanation of the process. So here we go:

#include <stdio.h> #include <stdlib.h> // notice that array is a pointer to a pointer int append_array_element(int **array, int *array_length, int element) { *array_length += 1; *array = realloc(*array, *array_length * sizeof(int)); (*array)[*array_length - 1] = element; // [] has higher priority // then * in C's order of return 0; // operations }We have a pointer to the pointer to the array, pointer to the array’s length, and a value to append to the array. Array is passed as a pointer to pointer so we will be able to ‘repoint’ the original pointer to a new memory segment.

Let’s call the function a few times and see what it gives us.

int main() { int *array = NULL; int array_length = 0; append_array_element(&array, &array_length, 142); printf ("Our array with %d elementsn", array_length); printf("%dn", array[0]); append_array_element(&array, &array_length, 19); printf ("Our array with %d elementsn", array_length); printf("%dn", array[0]); printf("%dn", array[1]); return 0; }And the output is:

Our array with 1 elements 142 Our array with 2 elements 142 19You can never be sure when you work with memory, so let’s add some error handling in case the operation fails.

int append_array_element(int **array, int *array_length, int element) { *array_length += 1; // realloc() returns pointer or False if it fails void *_tmp_array = realloc(*array, *array_length * sizeof(int)); if (!_tmp_array) { printf("Error while trying to realloc memory!n"); return -1; } *array = _tmp_array; (*array)[*array_length - 1] = element; return 0; }Now we will be able to handle the crash on our own. But reallocation is not a very fast thing, so let’s double the amount of reallocated memory every time we run out of allocated memory. We’ll need one more variable to track the current allocated memory size.

int append_array_element(int **array, int *array_length, int *array_alloc_length, int element) { *array_length += 1; if (*array_length > *array_alloc_length) { if (*array_alloc_length == 0) { *array_alloc_length = 1; } else { *array_alloc_length *= 2; } void *_tmp_array = realloc(*array, *array_alloc_length * sizeof(int)); if (!_tmp_array) { printf("Error while trying to reallocate memory for an array!n"); return -1; } *array = _tmp_array; } (*array)[*array_length - 1] = element; return 0; }Let’s call it few times:

int main() { int *array = NULL; int array_length = 0; int array_alloc_length = 0; int i; for (i = 0; i < 10; i++) { append_array_element(&array, &array_length, &array_alloc_length, i); } printf("Our array with %d elementsn", array_length); for (i = 0; i < 10; i++) { printf("%dn", array[i]); } printf("Array length: %d, allocated elements: %dn", array_length, array_alloc_length); return 0; } Our array with 10 elements 0 1 2 3 4 5 6 7 8 9 Array length: 10, allocated elements: 16And for the fun of it, let’s do the same with 100 elements:

Our array with 100 elements 0 1 2 ... 97 98 99 Array length: 100, allocated elements: 128Let’s improve the function a little bit more - and we are good to go. Let’s say we have a dynamic array with 129 elements - but we will have allocated memory for 256 elements… It is 127 more then we need! We don’t want to be greedy, so let’s find a way to free up the memory.

#include <stdio.h> #include <stdlib.h> #include <stdbool.h> // I included this just to use boolean values with // neat true/false aliases int append_array_element(int **array, int *array_length, int *array_alloc_length, int element, bool is_last_element) { *array_length += 1; if (*array_length > *array_alloc_length || is_last_element) { if (is_last_element) { *array_alloc_length = *array_length; } else if (*array_alloc_length == 0) { *array_alloc_length = 1; } else { *array_alloc_length *= 2; } void *_tmp_array = realloc(*array, *array_alloc_length * sizeof(int)); if (!_tmp_array) { printf("Error while trying to reallocate memory for an array!n"); return -1; } *array = _tmp_array; } (*array)[*array_length - 1] = element; return 0; }That should be pretty straight-forward. And let’s call it two more times with 10 and 100 elements:

int main() { int *array = NULL; int array_length = 0; int array_alloc_length = 0; bool is_last_element; int i; int i_max = 10; for (i = 0; i < i_max; i++) { if (i == i_max - 1) { is_last_element = true; } else { is_last_element = false; } append_array_element(&array, &array_length, &array_alloc_length, i, is_last_element); } printf("Our array with %d elementsn", array_length); for (i = 0; i < i_max; i++) { printf("%dn", array[i]); } printf("Array length: %d, allocated elements: %dn", array_length, array_alloc_length); return 0; }And the results are:

Our array with 10 elements 0 1 2 3 4 5 6 7 8 9 Array length: 10, allocated elements: 10 Our array with 100 elements 0 1 2 ... 97 98 99 Array length: 100, allocated elements: 100Pretty neat, huh? Thank you for reading!