
-

AI and the new junior engineer

Being somewhat of an AI sceptic while having to deal with the advent of AI tooling and its impact on engineering productivity is weird. The other day I was, as is often the case, engaging in some AI fear mongering while chatting with my colleague Kate. We got into it, especially the concerns about the future of junior software engineer roles. She made a valid point that many other industries have already navigated significant shifts due to automation (Kate - you legend).

I’d thought about this before, but I’d always dismissed the comparison due to the state AI tooling was in. Google practically forced all its engineers to use AI tooling long before it was ready, many of us burned out, and many of us have very strong opinions about how garbage this technology is. But over the past year alone we’ve reached the point where AI models - with some guidance - can perform many junior development tasks, like putting together a simple website or writing a hyper-specific tool. Oh, and shout-out to my then-manager-now-retired-friend Patrick - whose (exclusive, I am very fancy) newsletter encouraged me to dig into how AI tooling has changed. Thanks Patrick, I’ll probably send this to you.

In fact, Gemini Pro even helped me with an outline of this article. These are still my own thoughts, typed out with my own two hands (I find angrily typing out my thoughts to be therapeutic and the best part of writing). But there’s no writer’s block - I’m not staring at a blank document anymore. In front of me is a stub of an article that I hate with a passion, and this burning hatred for the AI-authored stub is what’s pushing me to write down my thoughts in one go.

You see, for a while I used to group AI with other tech trends like big data or blockchain - impactful technologies, but not something that fundamentally rewrote the playbook on software development. Yes, our databases are now orders of magnitude larger, but we’re still using the same interfaces to engage with them - and it’s now just “data”. And blockchain, outside of niche problems, turned out to be a grift. No, AI is really closer to the industrial revolution. And yeah, the news sources and many folks I know have been saying this for years - but that just sounded too grandiose to be true. I couldn’t see the forest of the changing job landscape for the trees of not-quite-mature models. But I think it’s true: the means of production will evolve.

Anyhow, Kate’s comments prompted me to look into how others adapted when their familiar landscapes changed.

The idea that “junior engineers won’t exist, so senior engineers won’t exist” has a simplistic appeal. However, it often overlooks how industries, and the people within them, actually progress. The software engineering career pipeline isn’t necessarily breaking - it’s just getting rerouted through unfamiliar territory.

Let’s be clear: the old model, where experience was often gained through highly repetitive tasks, was never perfect. It was simply the established way, the way of our ancestors: software engineers learn some math and not-particularly-useful concepts in college (or take a coding bootcamp, which is much faster, and in my experience, produces similar results), join a company, shadow more senior engineers, write some terrible code, make a few expensive mistakes for the company to absorb, and eventually graduate into productive developers of software. But like any system with inherent inefficiencies, this progression system was always susceptible to disruption.

This kind of transformation isn’t unique to software, and isn’t unique to AI. In my brief research I found many examples - here are three representative ones: one where the career pathway evolved, one where that path was closed, and one where it became easier.

The factories

Let’s get the factory floor comparison out of the way - it’s not great, but it sort of works. Getting your start in manufacturing meant working on an assembly line, performing a repetitive task hundreds of times a day, being noticed by the supervisor, and eventually being promoted up the chain. Throughout the later part of the twentieth century, industrial robots became a thing - these were big, dumb robots, but they could do what a worker did more consistently, sometimes faster, and most importantly - for a constant lower cost, and without sick days or unions.

The junior roles on the factory floor have changed - it’s now all about monitoring, maintaining, and troubleshooting the machines. Many jobs shifted from the factory floor to the office - you needed your CAD operators and technicians. The pathway to becoming a manufacturing professional is no longer about enduring years of menial, back-breaking labor: it’s all about understanding and interacting with automated systems.

The career ladder, while different, is still there for those willing to make the switch.

Opportunities aren’t better or worse - they’re just different.

Banking

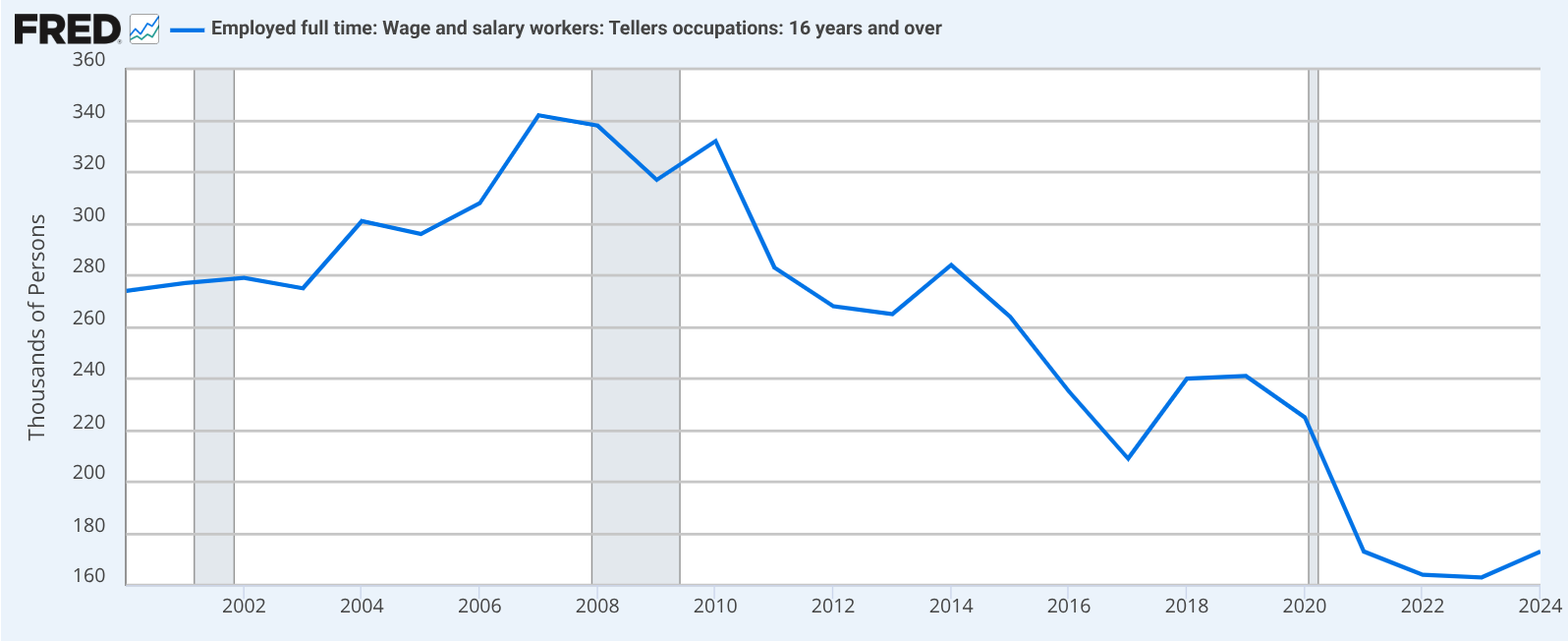

Bank tellers. For a long time, bank teller was a potential entry point into the world of finance. Learn the procedures, handle cash, advance through the branch, and move on to corporate. Then ATMs were introduced in the late 1960s (in a moment of vulnerability I’d like to admit that I didn’t realize that ATM stands for Automated Teller Machine). The need for a human to accept and dispense cash diminished.

The teller jobs changed. It wasn’t about counting bills (and in fact, if you’ve been to a bank recently and interacted with a teller - they don’t really count the cash for you). The job didn’t die out, but is mostly about customer service and selling products for the bank.

With the teller’s role changing - it’s now mostly that of a customer service representative and a salesperson - the direct path from teller to finance analyst is mostly no more. A finance degree and proficiency with financial software are what’s advantageous here, with the teller counter experience becoming irrelevant.

The door closes. Actually, the door’s been bricked up altogether.

Photography

Now for something that might have actually gotten better for folks trying to break in: photography. Ask your parents (or grandparents if you’re Gen Alpha) about becoming a pro photographer pre-digital. It was a mission: you needed a darkroom, which meant space and a boatload of chemicals that probably weren’t doing your health any favors. Equipment cost an arm and a leg, film was pricey, and developing film was a whole other cost and skill. You’d need to wait hours or days to see if those expensive shots were any good. Apprenticeships were common because the barrier to entry, both in cost and arcane knowledge, was sky-high.

Then digital cameras, especially affordable DSLRs in the 2000s and eventually decent smartphone cameras, changed the industry. Suddenly there was no more darkroom alchemy - with Photoshop your house no longer smelled like a chemistry set. The learning loop shortened from hours or days to seconds: take a picture - look at the screen - delete - take another picture. And abundant online tutorials made learning free and accessible for all.

Even distributing your work is easier - no longer do you need to contact a publisher or beg a gallery to look at your prints. Instagram, Flickr (is that still a thing?), and other photo sharing sites allow you to reach a global audience in a few clicks. Yes, the market’s flooded, and everyone and their mother is a photographer now. Experts still do stand out in the sea of amateurs, although it’s definitely much harder to do so. But the pathway to learning the craft and getting the audience has been democratized.

Knowledge is widely accessible, and entry costs are significantly lowered.

A common pattern emerges: the mundane, the highly repetitive, the work that served as “experience building” largely through sheer volume – that’s what tends to get automated. And as a software engineering manager, that’s what I usually leverage junior engineers for. And that’s not setting them up for success - oh no it’s not.

So, what does this mean for software engineering, and what can we practically do?

Think about the evolution of programming itself. We moved from wrestling with machine code and assembly to C, where we gained more expressive power but still had to meticulously manage memory. Then came languages like Python or Java, which abstracted away much of that lower-level complexity, allowing us to build applications faster and focus on different, often higher-level, business problems. Each step didn’t eliminate the need for smart engineers; it changed the nature of the problems they focused on and the tools they used.

This AI transition feels like another such leap - albeit somewhat more dramatic, with more tech bros, and with some of the NFT grifters joining in too. Which, combined with the costs and rough edges, makes it harder for some of us to see the value behind the technology. Yet, we’re getting a new set of incredibly powerful, high-level tools. The challenge isn’t that the work disappears, but that the valuable work shifts to orchestrating these tools, designing systems that incorporate them, and solving the new classes of problems that emerge at this higher level of abstraction.

If the common understanding of a junior software engineer is someone who primarily handles boilerplate code, fixes very basic bugs, or writes simple unit tests that could be script-generated, then yes, that specific type of role is likely to disappear. That work was more about human scripting than deep engineering.

The career path isn’t vanishing; it’s demanding different skills, often earlier.

For junior developers

Frankly, the advice for junior developers hasn’t really changed. Unlike Steve Yegge, I still think you should learn Vim though.

- Learn the tools: Your early-career work will increasingly involve interacting with AI-generated code, understanding its structure, integrating it, and debugging the subtle issues that arise. Developing effective prompts, validating AI outputs thoroughly, and fine-tuning models for specific tasks will be valuable skills. This is still foundational work, but it requires a different kind of critical engagement.

- Get good at learning: Tools and AI models will evolve quickly. The ability to teach yourself new paradigms efficiently is crucial. Focus on the underlying principles of software development; they tend to have a longer shelf life than specific tools.

- Develop scepticism: Don’t implicitly trust AI outputs. Your value will increasingly stem from your ability to critically evaluate, test, and verify what machines generate. Seek to understand why a tool produced a particular result.

- Practice: Theory is essential, but practical application is where deep learning occurs. Use these new AI tools to build something non-trivial. The challenges you encounter will be your best teachers.

- Tread with caution: Your senior peers might be sceptical of AI tooling. There’s a lot of hype, and senior developers have been through many hype cycles - I know I have. Show, don’t just tell, and be prepared to defend your (AI-assisted) work rigorously.

For senior engineers and managers

These are the steps my peers and I should be taking to set up those in our zone of influence for success.

- Adapt: Your role isn’t to shield junior colleagues from AI but to guide them in using these powerful tools effectively and ethically. This requires you to understand these tools well. Mentorship will likely focus more on system-level thinking in an AI-augmented environment.

- Re-evaluate skills: Consider how AI changes the value of certain traditional skills. Is deep expertise in a task that AI can now perform rapidly still the primary measure, or is the critical skill now defining problems effectively for AI and integrating its solutions? Your metrics for talent should adapt.

- Engage with tools: To lead this transition, you need firsthand experience with these tools. Understand their capabilities, limitations, and common failure modes. This practical knowledge is essential for making informed decisions and guiding your teams.

- Redesign tasks: Phase out giving juniors only the highly repetitive tasks that AI can now handle. Instead, structure initial assignments around using AI tools for leverage, then critically evaluating, testing, and integrating the results. Their first “wins” might be in successfully wrangling an AI to produce a useful component, then hardening it.

- Teach AI scepticism: Don’t assume junior developers will know how to question AI. Make it a core part of their training. Ask them to find the flaws in AI suggestions, to explain why an AI output is good or bad, not just that it “works.”

True engineering skill has always been about problem decomposition, understanding trade-offs, systems thinking, debugging complex interactions, and delivering value (to shareholders). AI doesn’t negate these fundamentals; it changes the context in which we apply them and the leverage available.

New engineers often lack deep context and the experience of seeing systems fail. AI won’t provide that - in fact it’ll make it harder to spot a lack of fundamental understanding.

The path to seniority won’t be about avoiding AI; it will be about learning to master it, question its outputs, and work strategically with it. It will require fostering critical thinking and deep systems knowledge to use these powerful tools wisely.

The old ways of gaining experience will not disappear - they will evolve. The challenge is to define what skill looks like in this new landscape and ensure our learning pathways effectively build it.

The future will need senior engineers; they will be the junior engineers of today who navigated evolving technological terrain and learned to harness its potential. It’s time to start mapping that new path.

-

Looking back on small web communities

Staying on the theme of nostalgia, I’d like to talk about small web communities. This post is part of the IndieWeb blog carnival - an alternative form of independent, personally curated content aggregation on a specific topic. I think that’s a pretty neat idea, a throwback in itself to how I used to discover cool stuff. This month’s carnival is hosted by Chris Shaw.

The fact that “small web communities” is a nostalgic topic for me should speak volumes about how I engage with the web today – or perhaps, how I don’t in the same way. But let me start at the beginning.

My relationship with web communities kicked off in the mid-2000s. I was probably in middle school, and while I’d had a computer for a while, the internet wasn’t yet a fixture in our home. That wasn’t a huge deal; my digital world mostly revolved around video games anyway.

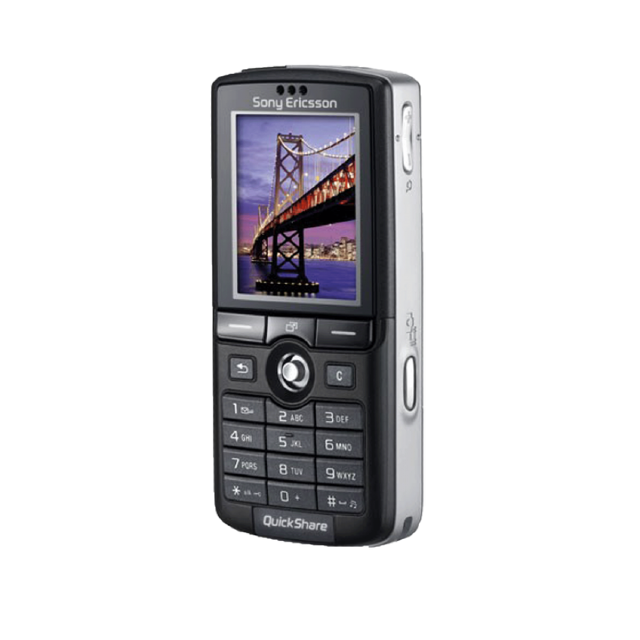

Then came the cell phone era. Suddenly, every kid at school had one, and my mom, bless her, got me one too – and a smartphone at that! I think it was the Sony Ericsson K750. Man, that thing felt like a marvel of technology. It played music, snapped pictures with its (then impressive) 2-megapixel camera, and – get this – it had a web browser! I could actually browse the web using (very expensive, very slow) mobile data.

It was on that tiny 176x220 pixel display that I stumbled into my first real online community. A schoolmate showed me this internet forum specifically built for phones. It was a godsend: low data requirements, images disabled by default (a blessing for my GPRS connection!), and topics covering a vast array of subjects – including, crucially, video games and tabletop gaming!

I became a frequent poster. I dived into countless threads, argued passionately about silly things (like Diablo II not really being a role-playing game - “you don’t play a role”), and even played through forum-based role-playing games. Quite frankly, I’m impressed I didn’t graduate school with carpal tunnel from all that WAP browsing (that’s Wireless Application Protocol if you’re not familiar). The other posters were mostly teenagers too, and over time, a small tabletop gaming community formed around that forum. There were maybe 20 of us, tops. We lived in different cities, went to different schools, but we were connected by our shared love of tabletop gaming, forum-based RPGs, and the low bandwidth afforded by our phones.

There was genuine camaraderie, a lot of laughter (typed out, of course), and the occasional burst of teenage drama (I vividly remember employing some social engineering to obtain my “rival’s” password and writing profanities on their profile page). It felt real.

Closer to the end of the 2000s, things escalated. I finally got dial-up at home. I lived in a small town, and dial-up was still pretty common there (a good reminder that I didn’t grow up in the US, so the pace of internet adoption was a bit different).

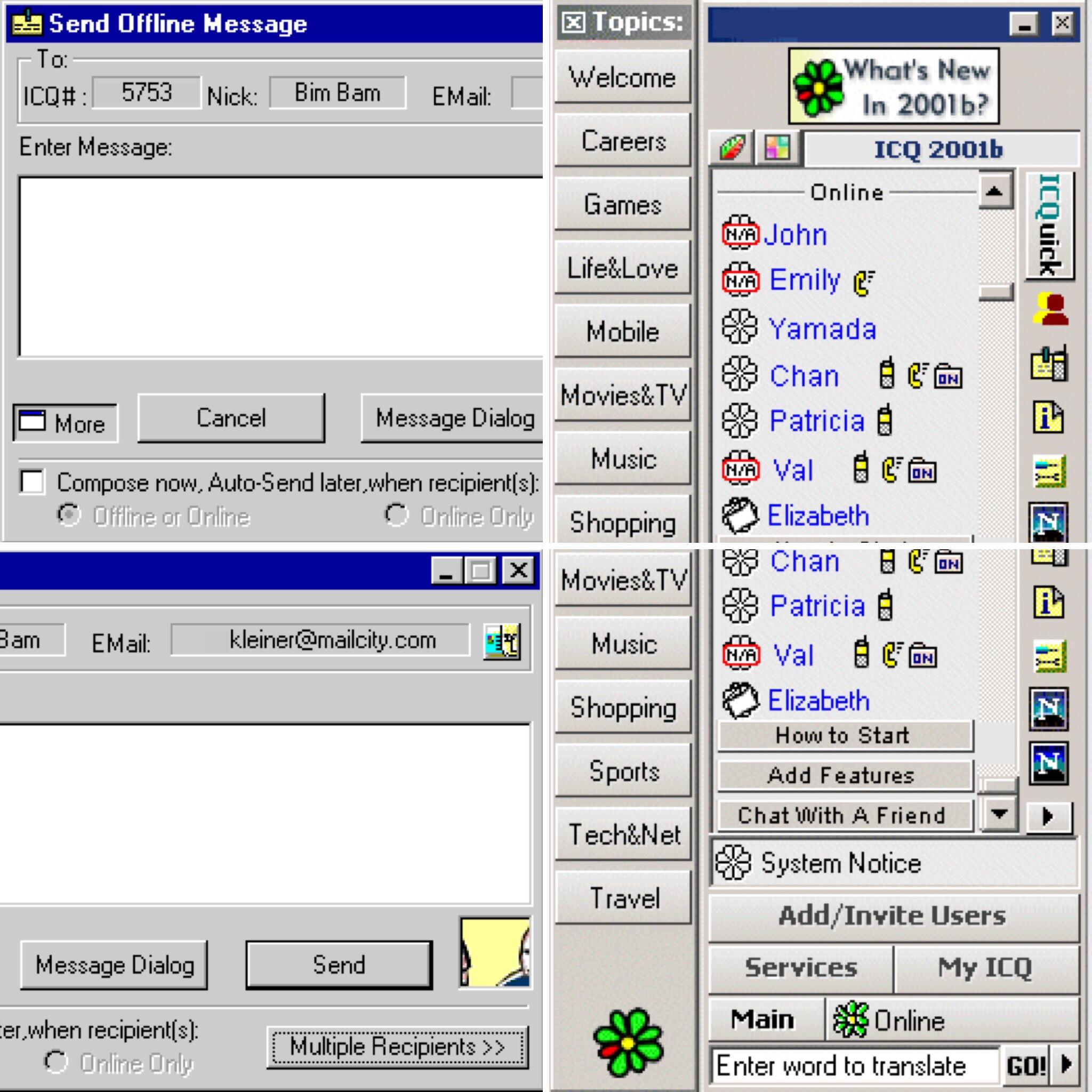

I was also neck-deep in my rebellious teenager phase – blasting heavy metal, rocking a black jacket and steel-toed boots (an outfit my ever-supportive mother actually bought for me to encourage my “rebellion”). MySpace was the place to be around that time, and I’d diligently seek out other alternative-looking teenagers from my town, add them as friends, and then the real conversations would happen over ICQ.

For those not in the know, ICQ was huge. It was kind of AOL Messenger’s cooler, more international predecessor. AOL didn’t really take off in Russia, but ICQ went on strong for ages – until being acquired by VK in 2010 and finally shutting down in 2024, ceasing its operation after a whopping 28 years. Long live the king, indeed.

As an aside, thinking about ICQ makes me a bit wistful for the era of interoperable protocols. Remember Jabber/XMPP? Google Talk ran on it, and you could use various clients to connect to different services, including ICQ gateways. There was this sense that you weren’t locked into one company’s ecosystem. You could pick your client, connect to your network, and chat with people regardless of what specific app they were using, as long as it used the same protocol. It felt like the web was more open, more… connected in a federated way. We’ve lost a lot of that. Now, everything is a walled garden, and I can’t help but feel that this shift has made it harder for those little, cross-platform communities to organically form and thrive. Everyone retreated into their own digital fortresses.

I spent countless afternoons after school, evenings, and late nights glued to that computer screen, chatting about the events of the day, school gossip, music, and, naturally, gaming - both tabletop and video games. Some of my ICQ friends were from out of town, people I’d never met in person, but they still felt like real people, tangible individuals on the other side of the screen.

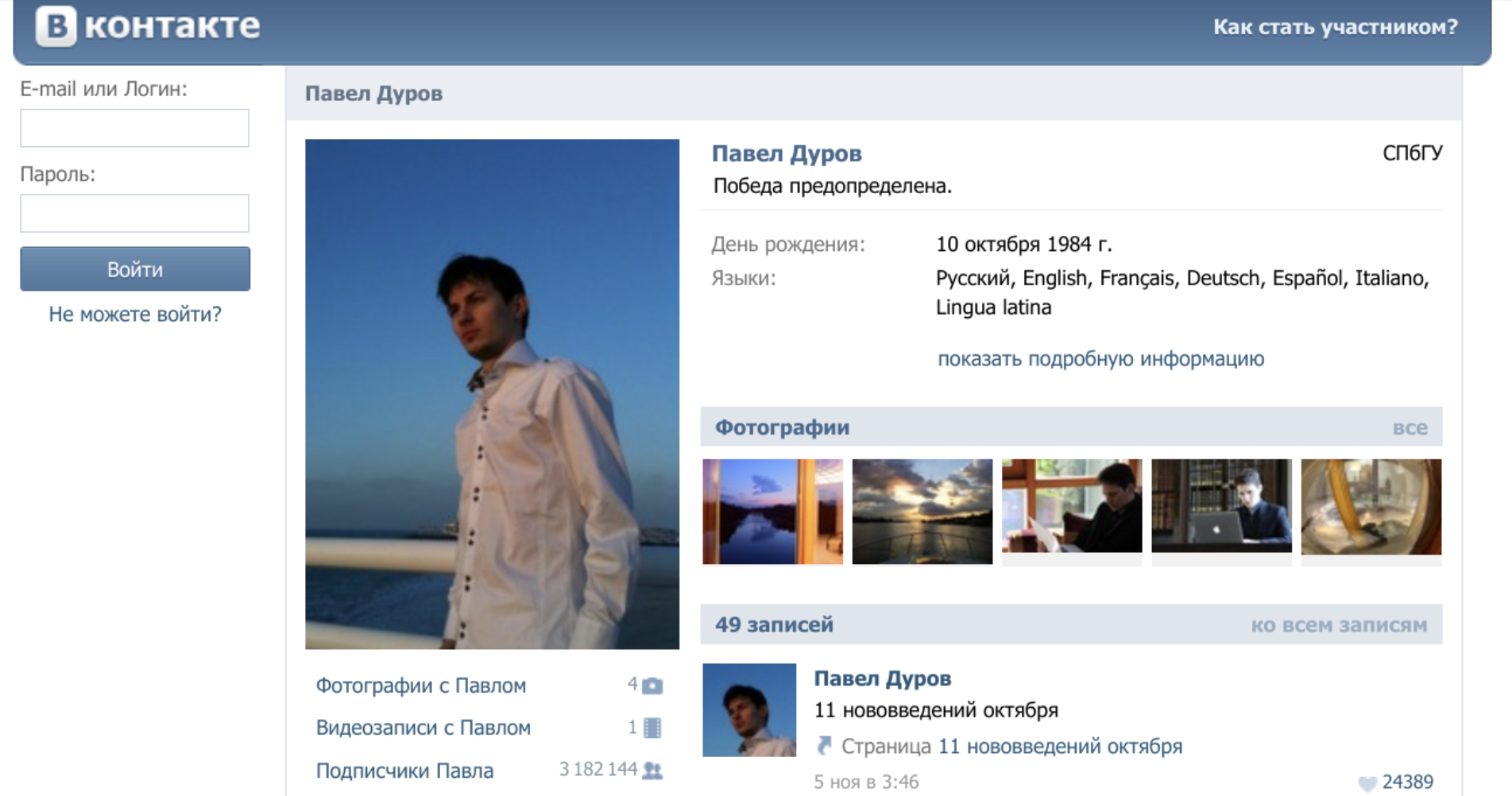

This brings me to VK (VKontakte), which really started to take off around that time in Russia, much like Facebook did elsewhere. These massive platforms were amazing in their own right – connecting everyone – but they also marked a shift. Suddenly, communication became centralized. Instead of seeking out niche forums or specific Jabber groups for your interests, you could just join a massive VK group with thousands, or even millions, of members.

It was convenient, sure. But something was lost. The intimacy of those smaller spaces started to fade. The signal-to-noise ratio plummeted. While you could find more people, it became harder to find your people, or at least, to have the same kind of focused, tight-knit interactions. The algorithms started to decide what you saw, rather than the curated flow of a smaller, human-moderated community. It felt like the digital equivalent of a bustling, anonymous city replacing a cozy village. As I started using VK, I became connected with a lot more people, spent more time scrolling through algorithmic content, and the smaller forums I engaged in just sort of… faded out?

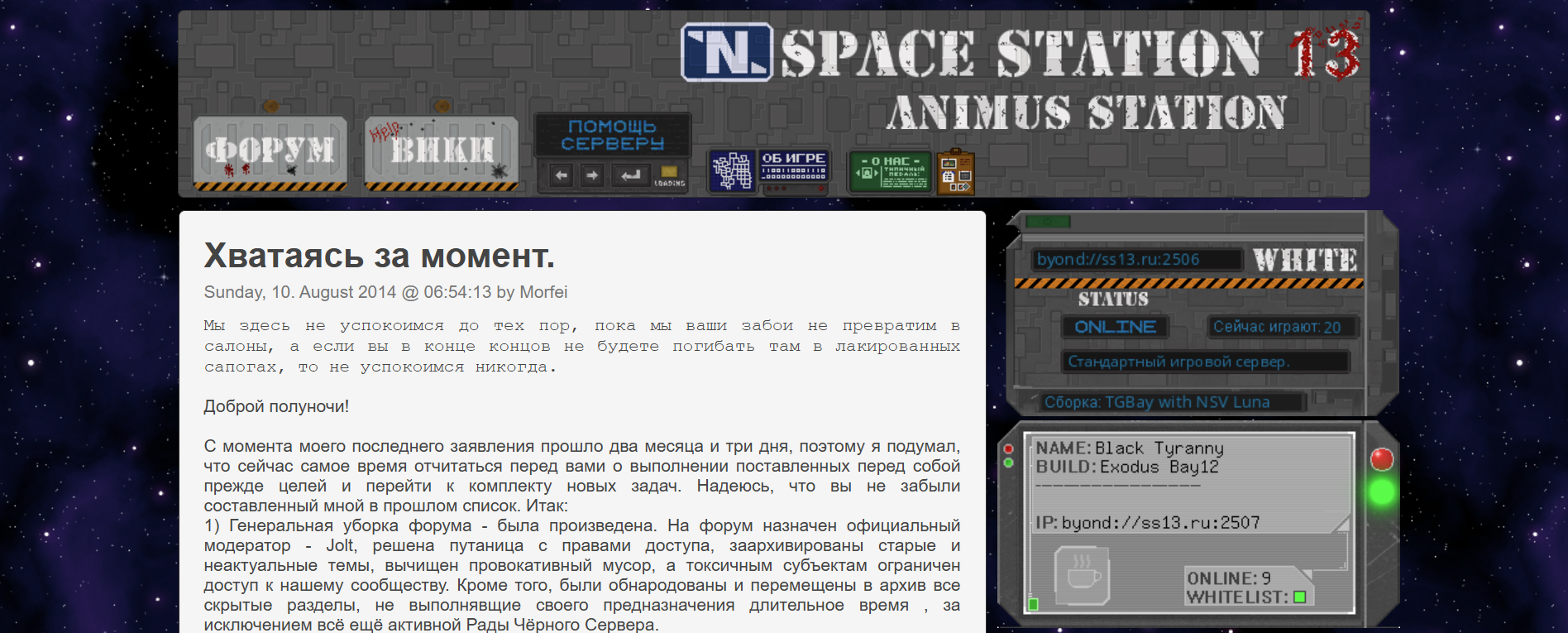

Despite this changing landscape, my journey into distinct online communities wasn’t completely over. Through someone I knew (no doubt from an ICQ chat), I discovered Space Station 13 – a game that really clicked with me. I dived headfirst into a close-knit Russian-speaking community for the game. I ended up becoming the custodian for the in-game wiki and eventually found myself heading up the server as a de-facto community leader. This was a noticeably larger community than my old forum haunts, with hundreds of active members, but the core crew of maybe 20-30 moderators and regulars maintained a very distinct (albeit, in retrospect, sometimes toxic) culture.

And again, the key thing was that each participant had their own personality – a distinct, real person on the other side of the screen. Some people were absolute good Samaritans, contributing to the wiki, the forum, or the server code. Others… well, others were notorious griefers who reveled in chaos. But they all felt like real people, because they were real people. Passionate, excited, sometimes infuriating, but undeniably real. There was drama around fudged moderator elections, complaints of hacking, tensions between role-players and munchkins… Yeah, real.

At some point, life happened. I moved to the US, my professional career started demanding more and more of my attention, and I gradually disengaged from the Space Station 13 community. I think this was around 2011-2013. I spent a number of years here focused on my career and professional growth, and frankly, I didn’t really engage with any specific internet communities for a while. My free digital time was mostly soaked up by solo experiences – video games and shows. Many, many hours were lost to the landscapes of Skyrim and the Cylons of Battlestar Galactica.

I did try to recapture some of that old magic. I remember joining a few local or interest-based IRC channels, hoping to find that spark. But the audience was always much, much older than I was at the time, or the vibe just wasn’t right, and I had a hard time connecting with people. I didn’t stick around for long in any of them.

Eventually, like so many others, I started using Reddit and YouTube more and more. I began relying on upvotes from massive, amorphous communities and the ever-present algorithms to introduce me to content. And for a long time, I didn’t really feel the need to engage with people as intimately online as I once had.

Real-world friendships, connections, and experiences had also, thankfully, taken center stage.

And then, relatively recently, as I was writing about my nostalgia for the old web, I discovered the IndieWeb. And something about it – its philosophy, its community, its focus on owning your own content and connecting on a more personal level – reignited that old desire to be part of a concrete, intentional online community. I’m excited about the idea of your personal website being a part of the larger network, heavily encouraging communicating with and actively hyperlinking to other sites.

I’m engaging in IndieWeb chat, I added my blog to the IndieWeb Webring, and, as of this submission, I’m participating in an IndieWeb blog carnival.

It feels like coming full circle, in a way.

-

How a shredder brought me joy

I like staying organized, one could say a little too much (or as my therapist puts it, “mild OCD”). This is going to read like an ad, but that’s because I’m just really excited about having a shredder now.

In addition to keeping the house tidy and my digital life clean, this involves keeping all relevant mail scanned, backed up, and nicely organized. Despite paperless opt-ins, banks, hospitals, and any random Joe love to send me physical mail. So I scan the letters, and if the contents are sensitive, file them with the hope that I’ll find a way to safely dispose of them in the future.

And then there’s junk mail. The average American household receives 848 pieces, or 40-41 pounds, of junk mail a year (according to a widely cited but ultimately lost ForestEthics report). And that’s just junk mail. That’s about 16 pieces of junk mail a week. Thankfully I’ve been able to rein that in with PaperKarma to 1-2 pieces of junk mail a week, but it still builds up. By the way, the PaperKarma one-time lifetime membership is absolutely worth it - best $59.99 I ever spent. That’s not an ad, I just really like the product.

All that to say, I’ve built up a lot of sensitive documents that need to be safely disposed of. I don’t feel comfortable just throwing those in my residential trash bin: the US Supreme Court ruled (in California v. Greenwood) that there’s no reasonable expectation of privacy in trash left out for collection, so dumpster diving is generally not a criminal activity. And while I (hopefully) don’t have the enemies required for someone to specifically target my trash cans, I just don’t feel great having my banking information or medical records being, you know, out there. I could take my documents to a local UPS or FedEx location, but I wanted to do something at home. Lo and behold, a shredder:

Now, I’m not a fan of the Amazon Basics business model - copy the top product in a category, bump up their own products in Amazon search results, undercut competitors with low prices (often selling at a loss), and then raise the prices once the competitors are out of business. But their shredder is pretty cool, so I got one. For only $42.99 I became a proud owner of a P-4 security standard Amazon Basics shredder.

What’s a P-4, you ask me? DIN 66399 / ISO 21964 (Wikipedia) conveniently outline the following shredder security levels:

| Level | Use case |
| --- | --- |
| P-1 | Non-sensitive documents, e.g. forms. |
| P-2 | Internal documents, e.g. memos. |
| P-3 | Personal information (e.g. addresses). |
| P-4 | Financial and tax records. |
| P-5 | Corporate balance sheets. |
| P-6 | Patents & R&D documents. |
| P-7 | Top secret intelligence documents. |

With each level increase, the pieces get smaller. Anything P-3 and under doesn’t seem sufficient for sensitive documents, specifically because they allow for documents to be cut up in strips of any length. Levels P-4 and up require smaller and smaller particle size.

P-4 lands on pieces 6 mm or smaller. At this point piecing a single document back together is effectively an impossible task. P-5 (pieces less than 2 mm) is an overkill for personal data, and comes with a huge jump in price. P-4 it is.

So here I am, having the time of my life with an Amazon Basics P-4 shredder. I’m sure there are other brands that could be better, or might follow more ethical practices - but this baby’s cheap, and it’s been a workhorse of the Osipov household. It can fill its attached bin before overheating, after which the little shredder needs to take a break for about an hour. But that’s a lot of documents!

I’ve been able to shred hundreds of scanned documents I stored, and guess what - this thing can even shred old credit cards (as long as they’re the plastic kind - sorry AMEX). I get giddy when I get mail because shredding things is just never-ending fun.

Yeah, I know it’s silly, but it’s such a therapeutic experience. There’s something magical about seeing your private information destroyed in front of your eyes. If you’re a dork like me, you should get a shredder. You’ll be a happier person.

-

Nostalgia for the old Web

I grew up on the Internet throughout the 2000s. I went to school, played Dungeons and Dragons and some collectible card games, and logged onto the forums after school. Me and a couple of my classmates were all on the same forums - and of course you’d learn about forums from your friends.

I didn’t speak English back then, and it was Russian-speaking forums for me all the way. I’m not sure about now, but back then Runet (yup, that’s Russian Internet) infrastructure was lagging behind the English-speaking world - dial-up was more prevalent, which informed the type of content that was popular. So my experience in the 2000s is probably reminiscent of someone’s experience in the US in the 90s. Either way, it was a time when only the nerdy kids were online.

This internet was a very different landscape from the Internet of today, with giant spaces like Facebook or Reddit. Back then, the communities were smaller, more tight-knit, and you’d know frequent posters. You’d know what troubles them, you’d know what their interests are - those were real people (or other kids, I guess), and you could really feel their existence on the other side of the screen.

There was something else there too. The Internet felt smaller, more intimate. The quality of discourse was better. You’d have these long forum threads going back and forth. Engagement on the Internet felt like a passion project, less commercialized. Webmasters proudly built bright (and sometimes jarring) websites. You proudly demonstrated your expert knowledge of emoji to express yourself. 🤓 It was a different time. It was a time before influencers, and passion was first and foremost. Building an internet following wasn’t really a ticket to real-world fame and profit, and it felt more… pure?

In the late 2000s and early 2010s, I experienced this directly when I ran the Russian speaking server of Space Station 13 - a multiplayer spaceship crewmate simulation game (MandaloreGaming does a great job explaining what this game is, go watch it). Here’s the website I built:

I don’t want to toot my own horn too much, but doesn’t this just have more character? Facebook and other sites were already becoming popular around that time, but it was amazing having our own corner of the Internet. We had forums, a chat, a wiki… Now that I reflect, the community could get somewhat toxic, but it was a community nonetheless.

Of course, I feel so nostalgic about that time period in my life. I was a kid, I didn’t have to worry about paying taxes. I naturally maintained friendships because I went to school every weekday. I could obsess over the craziest things. But separate from that general nostalgia, the internet itself truly felt different.

There are still some online communities that have refused to change: from small niche-interest forums to old-time giants like The Something Awful Forums. And of course IRC is still alive and kicking. I pop in and out of IRC chats every couple of years when I miss simple no-bloat chat spaces with fellow tech enthusiasts. Did you know that besides tech enthusiasts, IRC is widely used by site reliability engineers, at least at Google? Can’t be relying on bloated chat apps during an outage - and nothing beats IRC!

It’s even more interesting to see communities that try to recapture the olden days. There are folks engaging today, trying to follow the old ways. Projects like Melonland aim to capture the feeling of the old web. The fun part is that, at least from some discourse, this movement seems to be popular among the younger generation - folks who didn’t live through the early days of the Internet. It’s part of a small but growing online movement of late Gen Z and early Gen Alpha striving for digital minimalism.

For me it’s largely about the nostalgia and simplicity. A different time, but also an Internet that put self-expression first and foremost, before monetization underlined every word. It was a time when you had to seek out content to engage with, and not the other way around (looking at you, algorithmic feeds).

-

Back up your digital life

Our digital life increasingly exists primarily in the cloud. Documents, photos, emails, passwords: all of this resides in the cloud. And be it OneDrive, iCloud, Google Drive, or Dropbox - you don’t really own any of it.

We trust these companies with our digital life and take their reliability for granted, but it’s worth remembering that nothing in this world is a guarantee. The likelihood of an outright failure of these services is relatively low; Google, for example, stores copies of all data in data centers across three geographic locations (often across multiple regions). Microsoft, Amazon, Apple and other giants follow equivalent policies. The real threat with this storage is bureaucracy. Your account can be erroneously flagged and banned: automated systems that constantly scan for policy violations aren’t perfect and can misfire. Your account can get hacked, even with a strong password and two-factor authentication. Navigating the account restore process and getting access back can take weeks or months, or be altogether impossible.

Because of this, local backups are critical if you care about your data - which you probably do.

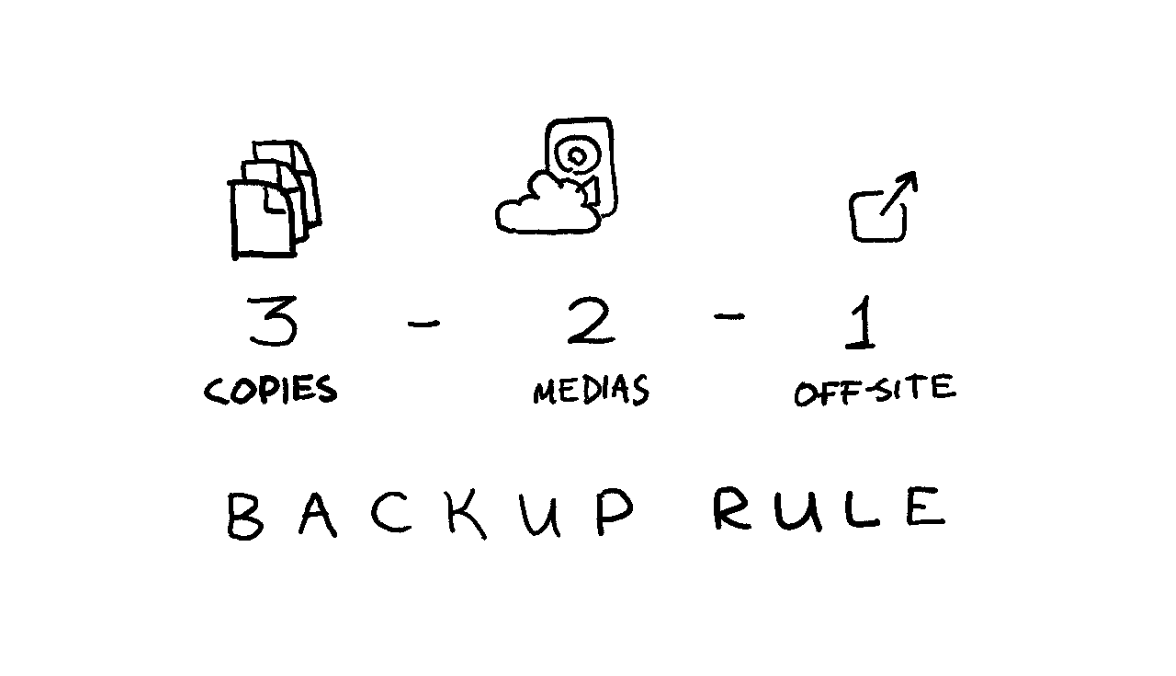

3-2-1 backup strategy

A common, straightforward, and widely used rule of data backups is referred to as the “3-2-1 rule”:

- Keep three copies of your data.

- Use two types of media for storage.

- Keep one copy off-site.

In fact, you’ll be hard-pressed to find a cloud service provider who doesn’t subscribe to some (likely more complex) variation of this rule.

We’ll satisfy the 3-2-1 backup rule with a dedicated backup drive and a home computer you likely already have:

- ✔️ Keep three copies of your data: (1) in the cloud, (2) on a dedicated backup drive, and (3) on a home device you already have.

- ✔️ Use two types of media for storage: (1) in the cloud, and (2) on our backup drive.

- ✔️ Keep one copy off-site: you’ll keep your backup drive at home, which is a different location from the cloud data centers.
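If you like to see rules written down as code, here is a minimal Python sketch of the checklist above. The `Copy` class, its fields, and the sample entries are all made up for illustration, and I mark the cloud copy as the off-site one (relative to home), which is the same separation seen from the other side.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of your data. All fields are hypothetical labels for this sketch."""
    name: str
    media: str      # e.g. "cloud", "hdd", "ssd"
    off_site: bool  # True if this copy lives away from your home


def satisfies_3_2_1(copies: list[Copy]) -> bool:
    """Return True if the given copies meet the 3-2-1 rule."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.media for c in copies}) >= 2
    has_off_site = any(c.off_site for c in copies)
    return enough_copies and enough_media and has_off_site


# The setup described above: cloud, a dedicated backup drive, and a home device.
my_copies = [
    Copy("cloud provider", media="cloud", off_site=True),
    Copy("backup drive", media="hdd", off_site=False),
    Copy("home laptop", media="ssd", off_site=False),
]

print(satisfies_3_2_1(my_copies))
```

Dropping any one of the three entries makes the check fail, which is a decent reminder of how little slack the rule leaves.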

Use an external HDD for backups

Solid state drives - SSDs - are all the rage today: they’re blazingly fast and have become relatively affordable. But you don’t want an SSD for a backup. SSD reliability isn’t great when left unpowered, with the low end of failures occurring at merely the one-year mark. And since it’s a backup, you’ll want to leave it unpowered.

No, for this you’ll want a hard drive - an HDD. We’ll be trading read/write speed for reliability. External hard drives are affordable, don’t need to be powered to store the data, have been around for ages, and degrade more slowly. A quality hard drive should be mostly reliable for 5-7 years, and can be repurposed as tertiary backup storage after that. Set yourself a reminder to do that.

Finally, some data can be recovered from a failed HDD, while failed SSDs are largely unrecoverable.

Survey your space needs, and pick a hard drive a few times that size to leave room for growth. I use a 4 TB Seagate portable HDD, and it’s working just fine.
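One rough way to survey your space needs is to total up the folders you plan to back up. The Python sketch below does just that; the `~/Documents` path and the 3x headroom factor are placeholders I picked for illustration, not recommendations.

```python
import os


def directory_size_bytes(root: str) -> int:
    """Sum the sizes of all regular files under root, skipping unreadable ones."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            try:
                # Skip symlinks so linked files aren't counted twice.
                if not os.path.islink(path):
                    total += os.path.getsize(path)
            except OSError:
                # File vanished or is unreadable; leave it out of the estimate.
                pass
    return total


def suggested_drive_bytes(data_bytes: int, headroom: int = 3) -> int:
    """Multiply current usage by a headroom factor (3x is an arbitrary assumption)."""
    return data_bytes * headroom


if __name__ == "__main__":
    used = directory_size_bytes(os.path.expanduser("~/Documents"))  # hypothetical path
    suggested = suggested_drive_bytes(used)
    print(f"Data: {used / 1e12:.2f} TB, suggested drive: {suggested / 1e12:.2f} TB")
```

Run it once per folder you care about (photos, documents, cloud exports) and add the numbers up before shopping for a drive.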

Use an existing device for tertiary storage

You likely already have some devices you could use at home. Maybe a laptop you’re currently using, or an old desktop tower you haven’t plugged in for years. Using this device will help ensure reliability and longevity of your data.

If you don’t have anything you can use, or your existing storage is too small - violating the 3-2-1 backup rule is better than having no backup at all. Use an external HDD, which you can downgrade to tertiary storage once you replace it in 5-7 years.

On encryption

Encrypting or not encrypting your backups is a personal choice.

You’ll likely be backing up important documents, which makes encryption critical for security. If the backup gets stolen, your whole life can be turned upside down (although this possibility still exists today if someone hacks into your cloud account).

However, because backups tend to live a long time, encryption can have downsides: tools can change, and most importantly you can forget your password. You also can’t decrypt a partially recovered backup: it’s all or nothing.

If you choose to encrypt, consider using established and mature open source encryption tooling like `gpg` (I wrote about how to use GPG all the way back in 2012).

It’s not all or nothing either: you can choose to only encrypt sensitive documents, but leave less sensitive media like photos, videos, or music unencrypted.

I do not encrypt my backups because I worry about forgetting my password by the time I need to recover the backup. I have a tendency to get in my own way: I couldn’t recover some writing I backed up in 2012 because I couldn’t figure out what the password was. How fun.

Extracting data from Cloud

Internet giants allow you to download all your data in a fairly convenient manner. Google has Google Takeout, which lets you download data across services (Google Drive, Photos, email, etc). Apple allows you to request a copy of your data, and Microsoft allows you to submit a privacy request.

Don’t forget about other service providers who store your data like email providers or password managers.

Back up regularly

Set up a routine you’ll follow. For me, it’s every year. I won’t follow a more rigorous backup routine, and the trade-off of losing a year’s worth of data is worth the convenience of infrequent backups.

As our lives become more intertwined with the digital world, protecting your data is essential. By following the 3-2-1 backup strategy and using reliable storage, you can safeguard your data against unexpected mishaps. Regular backups and smart encryption choices will help keep your digital life secure and accessible. So, take a moment to set up your backups today - you’ll thank yourself later for the peace of mind that comes with knowing your data is safe.

-

Static websites rule!

I hope you’ve noticed that navigating to this page was quick (let’s hope that the Internet Gods are kind to me, and nothing stood in the way of you accessing this page). In fact, most pages on my blog - hopefully including this one - should render in under a second. I didn’t put any work into optimizing this site, and it’s not a boast, nor is it a technological marvel - this is just a good old fashioned static website.

If this is new to you - a static website is just what it sounds like - static HTML and CSS files, sometimes with some light JavaScript sprinkled throughout. There’s no server side processing – the only bottlenecks are the host server speed, the recipient’s connection speed, and the browser rendering speed. A page is stored as is, and is sent over as soon as it’s requested. This is how the Internet used to be in the late 90s and early 2000s (with eclectic web design to boot, of course).

I think static websites are cool and aren’t used nearly enough, especially for websites that are, well, static. Think of the last website you visited to read something - maybe a news site, or maybe a blog. Did it take at least a couple of seconds to load? Likely. Did its server have to waste unnecessary cycles putting together a page for you? Most definitely. Now, contrast this with your experience with a static website like this one. Here’s the result from pagespeed.web.dev for this page:

Every render completes in under a second, and I didn’t have to put any work into optimizing my website.

This site is built on a (now unsupported) Octopress, which is itself built on top of Jekyll. You write pages in Markdown, generate web pages using a pre-made template, and deploy the resulting pages to a hosting provider. In fact, GitHub Pages allows you to host your static website for free, and you can have a third party platform like Disqus provide comment support.
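To make the "write, generate, deploy" loop a little more concrete, here is a toy generator in Python. To be clear, this is not how Jekyll or Octopress work internally: the template, the function names, and the choice to read `.txt` files are all my own stand-ins, and there is no real Markdown parsing here (each blank-line-separated block simply becomes a paragraph).

```python
import html
from pathlib import Path
from string import Template

# A stand-in for a pre-made theme; real generators ship far richer templates.
PAGE_TEMPLATE = Template(
    "<!DOCTYPE html>\n"
    "<html><head><title>$title</title></head>\n"
    "<body><h1>$title</h1>\n$body\n</body></html>\n"
)


def render_page(title: str, text: str) -> str:
    """Turn plain text into a full HTML page, one <p> per blank-line-separated block."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    body = "\n".join(f"<p>{html.escape(p)}</p>" for p in paragraphs)
    return PAGE_TEMPLATE.substitute(title=html.escape(title), body=body)


def build_site(source_dir: Path, output_dir: Path) -> list[Path]:
    """Render every .txt file in source_dir to an .html file in output_dir."""
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for source in sorted(source_dir.glob("*.txt")):
        page = render_page(source.stem, source.read_text(encoding="utf-8"))
        target = output_dir / f"{source.stem}.html"
        target.write_text(page, encoding="utf-8")
        written.append(target)
    return written
```

Everything happens once, at build time. The output folder is just files, which is why any plain file host can serve it without a backend.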

Static websites work great for portfolios, blogs, and websites that don’t rely on extensive content manipulation. They’re more secure (no backend to hack), simple to build and maintain, very fast even without optimization, and are natively SEO friendly (search engines are great at understanding static pages). Static websites are cheap to run - I only pay for a domain name for this site (under $20 a year).

If you have a blog or a portfolio and you’re using an overly complicated content management system to write - consider slimming down. Jekyll (or many of its alternatives) offers a number of pre-made off-ramps for major CMS users, is easy to set up, and is straightforward to work with. Can’t recommend enough - static websites rule!

-

Exercising online privacy rights

Following Europe’s 2016 General Data Protection Regulation (GDPR), California’s own California Consumer Privacy Act (CCPA) took effect in 2020. I won’t pretend to understand the intricacies of the law or the differences between the two, but from what I understand this gives you the right to know exactly what data of yours businesses use, and to request that this information not be sold, or that it be deleted.

As a California resident, I decided to dedicate a long weekend to exercising my privacy rights. The long weekend turned into a week’s worth of back and forth with a dozen-or-so companies, and me having a much better idea of what information about me is out there.

Turned out many large websites provide privacy dashboards where you’re able to review and see information collected or inferred about you. But most of this data is hidden behind a formal request process which takes a few days to a week.

First, I decided to stroll through Google’s privacy settings. There are two ways forward: privacy dashboard, or full-on Google Takeout. Google Takeout allows you to download an archive of everything Google has on you, which took a few days to process, and is near impossible to go through while keeping your sanity. So I decided to play with the privacy dashboard instead.

Google Maps has location history of most places I’ve visited for the past ten or so years (creepy, but I found it useful on more than one occasion), and YouTube and Search history stores thousands of searches. I already had Assistant history disabled, since storing audio recordings is apparently where I draw the line when it comes to privacy. Targeted ad profile was an interesting thing to look at, accurately summing up my lifestyle in 50 words or less. I ended up disabling targeted ads from Google (and all other services as I went about on my privacy crusade).

Google had some of the finest privacy controls compared to other services, with actionable privacy-leaning suggestions. Google’s not known for its services playing well together, but privacy is where Google feels closer to the Apple experience - everything is in a single place, surfaced in the same format, and easy to control. Given the amount of transparency and fine grained control, I feel pretty good staying in the Google ecosystem.

Next I looked at LinkedIn. Outside of the expected things – emails, phone numbers, messages, invitations, and a history of just about everything I’ve ever clicked on – a file labeled “inferences” stood out. It covers whether LinkedIn thinks you’re open to job seeking opportunities, what stage of your career you are in, whether you travel for business, and whether you’re a recruiter or maybe a senior leader in your company.

Since LinkedIn is a professional network, all information I share is well curated and is meant as public by default – and I found LinkedIn privacy settings in line with my expectations.

As an avid gamer, I went through Steam, Good Old Games, Ubisoft, Epic Games, and Origin privacy details. Unsurprisingly, the services tracked every time I launched every game, shopping preferences, and so on. Thankfully the data seemed confined to the world of gaming – which made this level of being creepy somewhat okay in my book.

I also looked at random websites I use somewhat frequently – Reddit, StackOverflow, PayPal, Venmo, AirBnB, and some others – not too many surprises there, although I did end up tightening privacy settings and opting out of personal data sharing and ad tracking for every service.

Last year I requested deletion of all my data on Mint, Personal Capital, and YNAB (You Need a Budget), and to be honest I’m a little relieved that I didn’t have to look at the data these companies had on me.

Amazon data sharing turned out to be the scariest finding. Until now I didn’t really self-identify as a heavy Amazon user, but that turned out to be a lie: Prime shopping, Kindle, Audible, Prime Video.

The amount of data Amazon kept on me was overwhelming: Kindle and Audible track every time I read, play, or pause books, the Amazon website keeps full track of browsing habits, and Prime Video has detailed watch times and history. Most of this data ties back into the real world – including nearly every address I ever lived at or phone number I had.

Even scarier, despite never using Alexa, I found numerous recordings of my voice from close to a decade ago – mostly me checking the status of packages, but also a few of me just breathing and walking around. I found no way of deleting these, as they didn’t show up in any privacy settings (I even installed an Alexa app just to get into its privacy settings).

All of this gave me pause. It feels like the privacy controls are either lacking, hidden, or spread out thin across Amazon’s various apps. And I’ve only briefly scanned through the data Amazon had on me.

That’s where I had to take a break.

I have accounts with hundreds of services, and I have no idea how my personal data is used, and what it’s joined with. As I go about my daily life, I’ll start tightening privacy controls, and maybe deleting services and their data where possible.

It’s just too creepy for my taste.

While you have control over the services you have accounts for, companies and ISPs collect a trove of private information on you even while you’re not logged in. For that, I strongly recommend using a VPN. I’ve been using PIA since 2019 and I’ve been very happy with it. Wholeheartedly recommend.

-

Vortex Core 40% keyboard

This review is written entirely using Vortex Core, in Markdown, and using Vim.

Earlier this week I purchased Vortex Core - a 40% keyboard from a Taiwanese company Vortex, makers of the ever popular Pok3r keyboard (which I happen to use as my daily driver). This is a keyboard with only 47 keys: it drops the numpad (what’s called 80%), function row (now we’re down to 60%), and the dedicated number row (bringing us to the 40% keyboard realm).

Words don’t do justice to how small a 40% keyboard is. So here is a picture of Vortex Core next to Pok3r, which is already a small keyboard.

At around $100 on Amazon it’s one of the cheaper 40% options, but Vortex did not skimp on quality. The case is sturdy, is made of beautiful anodized aluminum, and has some weight to it. The keycaps this keyboard comes with feel fantastic (including slight dips on the `F` and `J` keys), and I’m a huge fan of the look.

I hooked it up to my Microsoft Surface Go as a toy more than anything else. And now I think I may have discovered the perfect writing machine! The small form factor of the keyboard really complements the already small Surface Go screen, and there’s just enough screen real estate to comfortably write and edit text.

I’ve used Vortex Core on and off for the past few days, and I feel like I have a solid feel for it. Let’s dig in!

What’s different about it?

First, the keycap size and distance between keys are standard: it’s a standard staggered layout most people are used to. This means that when typing words, there is no noticeable speed drop. In fact I find myself typing a tiny bit faster using this keyboard than my daily driver - but that could just be my enthusiasm shining through. I hover at around 80 words per minute on both keyboards.

That is until it’s time to type “you’re”, or use any punctuation outside of the `:;,.<>` symbols. That’s right, the normally easily accessible apostrophe is hidden under the function layer (`Fn1 + b`), and so is the question mark (`Fn1 + Shift + Tab`). `-`, `=`, `/`, `\`, `[`, and `]` are gone too, and I’ll cover those in due time.

On the first day this immediately dropped my typing speed to around 50 words per minute, as it’s completely unintuitive at first! In fact, I just now stopped hitting `Enter` every time I tried to place an apostrophe! But after only a few hours of sparingly using Vortex Core I’m up to 65 WPM, and it feels like I would regain my regular typing speed within a week.

Despite what you might think, it’s relatively easy to get used to odd key placement like this.

Keys have 4 layers (not to be confused with programming layers), and that’s how the numbers, symbols, and some of the more rarely used keys are accessed. For example, here’s what the key `L` contains:

- Default layer (no modifiers): `L`

- `Fn1` layer: `0`

- `Fn1 + Shift` layer: `)`

- `Fn` layer: right arrow key
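If it helps to think of layers as a lookup table, here is how I’d model the `L` key in Python. The dictionary layout and the `resolve` helper are my own invention for illustration, and have nothing to do with how the firmware actually stores its keymap.

```python
# Layers of the L key as described above. The data layout is an assumption
# made for illustration, not the firmware's real keymap format.
L_KEY_LAYERS = {
    frozenset(): "L",                 # default layer, no modifiers held
    frozenset({"Fn1"}): "0",
    frozenset({"Fn1", "Shift"}): ")",
    frozenset({"Fn"}): "Right",       # right arrow key
}


def resolve(layers: dict, held: set) -> str:
    """Return what a key produces given the set of modifiers currently held."""
    return layers[frozenset(held)]


print(resolve(L_KEY_LAYERS, {"Fn1", "Shift"}))  # prints ")"
```

Every other key on the board carries a similar four-entry table, which is a lot to memorize at once.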

The good news is that unlike many 40% keyboards on the market (and it’s a rather esoteric market), Vortex Core has key inscriptions for each layer. Something like Planck would require you to print out layout cheatsheets while you get used to the function layers.

As I continue attempting to type, numbers always take me by surprise: the whole number row is a function layer on top of the home row (where your fingers normally rest). After initially hitting the empty air when attempting to type numbers, I began to get used to using the home row instead.

The placement mimics the order the keys would be in on the number row (`1234567890-=`), but `1` is placed on the `Tab` key, while `=` is on `Enter`. While I was able to find the numbers relatively easily due to similar placement, I would often be off-by-one due to the row starting on the `Tab` key.

Things get a lot more complicated when it comes to special symbols. These are already normally gated behind a `Shift`-press on a regular keyboard, and Vortex Core requires some Emacs-level gymnastics! E.g. you need to press `Fn1 + Shift + F` to conjure `%`.

Such complex keypresses are beyond counter-intuitive at first. Yet after a few hours, I began to get used to some of the more frequently used keys: `!` is `Fn1 + Shift + Tab`, `-` is `Fn1 + Shift + 1`, `$` (end of line in Vim) is `Fn1 + Shift + D`, and so on.

Combining symbols quickly becomes problematic.

It’s fairly easy to get used to inserting a lone symbol here and there, but the problems start when having to combine multiple symbols at once. E.g. writing an expression like `'Fn1 + Shift + D' = '$'` above involves the following keypresses: `<Fn1><Esc> F N <Fn1><Tab> <Fn1><Shift><Enter> S H I F T <Fn1><Shift><Enter> D <Fn1><Esc> <Fn1><Enter> <Fn1><Esc> <Fn1><Shift>D <Fn1><Esc>`. Could you imagine how long it took me to write that up?

The most difficult part of getting used to the keyboard is the fact that a few keys on the right side are chopped off: `'/[]\` are placed in the bottom right of the keyboard, on the `bnm,.` keys. While the rest of the layout attempts to mimic the existing convention and only shifts the rows down, the aforementioned keys are placed arbitrarily (as there’s no logical way to place them otherwise).

This probably won’t worry you if you don’t write a lot of code or math, but I do, and it’s muscle memory I’ll have to develop.

There are dedicated `Del` and `Backspace` keys, which is a bit of an odd choice, likely influenced by needing somewhere to place the `F12` key - function row is right above the home row, and is hidden behind the `Fn1` layer.

Spacebar is split into two (for ease of finding keycaps I hear), and it doesn’t affect me whatsoever. I mostly hit spacebar with my left thumb and it’s convenient.

`Tab` is placed where the `Caps Lock` is, which feels like a good choice. After accidentally hitting `Esc` a few times, I got used to the position. Do make sure to get the latest firmware for your Vortex Core - I believe earlier firmware versions hide Tab behind a function layer, defaulting the key to `Caps Lock` (although my keycaps reflected the updated firmware).

So I’d say the numbers and the function row take the least amount of time to get used to. It’s the special characters that take time.

Can you use it with Vim?

I’m a huge fan of Vim, and I even wrote a book on the subject. In fact, I’m writing this very review in Vim.

And I must say, it’s difficult. My productivity took a hit. I use curly braces to move between paragraphs, I regularly search with `/`, `?`, and `*`, move within a line with `_` and `$`, and use numbers in my commands like `c2w` (change two words) as well as other special characters, e.g. `da"` (delete around double quotes).

The most difficult combination is spelling correction: `z=` followed by a number to select the correct spelling. I consistently break the flow by having to press `Z <Fn1><Enter> <Fn1><Tab>` or something similar to quickly fix a misspelling.

My Vim productivity certainly took a massive hit. Yet, after a few days it’s starting to slowly climb back up, and I find myself remembering the right key combinations as the muscle memory kicks in.

I assume my Vim experience translates well into programming. Even though I write code for a living, I haven’t used Vortex Core to crank out code.

Speaking of programming

The whole keyboard is fully programmable (as long as you update it to the latest firmware).

It’s an easy process - a three page manual covers everything that’s needed like using different keyboard layers or remapping regular and function keys.

The manual also mentions using right `Win`, `Pn`, `Ctrl`, and `Shift` keys as arrow keys by hitting left `Win`, left `Alt`, and right spacebar. Vortex keyboards nowadays always come with this feature, but due to the small form factor of the keys (especially Shift), impromptu arrow keys on Vortex Core are nearly indistinguishable from individual arrow keys.

Remapping is helpful, since I’m used to having `Ctrl` where `Caps Lock` is (even though this means I have to hide Tab behind a function layer), or using `hjkl` as arrow keys (as opposed to the default `ijkl`).

It took me only a few minutes to adjust the keyboard to my needs, but I imagine I will come back for tweaks - I’m not so sure if I’ll be able to get used to special symbols hidden behind the `Fn1+Shift+` key layer. Regularly pressing three keys at a time (with two of these keys being on the edge of the keyboard) feels unnatural and inconvenient right now. But I’m only a few hours in, and stenographers manage to do it.

Living in the command line

The absence of certain special characters is especially felt when using the command line. Not having a forward slash available with a single keypress makes typing paths more difficult. I also use `Ctrl + \` as a modifier key for tmux, and as you could imagine it’s just as problematic.

Despite so many difficulties, I’m loving my time with Vortex Core! To be honest with myself, I don’t buy new keyboards to be productive, or increase my typing speed. I buy them because they look great and are fun to type on. And Vortex Core looks fantastic, and being able to cover most of the keyboard with both hands is amazing.

There’s just something special about having such a small board under my fingertips.

-

How I use Vimwiki

I’ve been using Vimwiki for 5 years, on and off. There’s a multi year gap in between, some entries are back to back for months on end, while some notes are quarters apart.

Over those 5 years I’ve tried a few different lightweight personal wiki solutions, but kept coming back to Vimwiki due to my excessive familiarity with Vim and the simplicity of the underlying format (plain text FTW).

I used to store my Vimwiki in Dropbox, but after Dropbox imposed a three device free tier limit, I migrated to Google Drive for all my storage needs (and haven’t looked back!). I’m able to view my notes on any platform (including previewing the HTML pages on mobile).

I love seeing how other people organize their Wiki homepage, so it’s only fair to share mine:

I use Vimwiki as a combination of a knowledge repository and a daily project/work journal (`<Leader>wi`). I love being able to interlink pages, and I find it extremely helpful to write entries journal-style, without having to think of a particular topic or a page to place my notes in.

Whenever I have a specific topic in mind, I create a page for it, or contribute to an existing page. If I don’t - I create a diary entry (`<Leader>w<Leader>w`), and move any developed topics into their own pages.

I use folders (I keep wanting to call them namespaces) for disconnected topics which I don’t usually connect with the rest of the wiki: like video games, financial research, and so on. I’m not sure I’m getting enough value out of namespaces though, and I might revisit using those in the future: too many files in a single directory is not a problem since I don’t interact with the files directly.

Most importantly, every once in a while I go back and revisit the organizational structure of the wiki: move pages into folders where needed (`:VimwikiRenameLink` makes this much less painful), add missing links for recently added but commonly mentioned topics (`:VimwikiSearch` helps here), and generally tidy up.

I use images liberally (`{{local:images/nyan.gif|Nyan.}}`), and I occasionally access the HTML version of the wiki (generated by running `:VimwikiAll2HTML`).

I’ve found it useful to keep a running todo list with a set of things I need to accomplish for work or my projects, and I move those into corresponding diary pages once the tasks are ticked off.

At the end of each week I try to have a mini-retrospective to validate if my week was productive, and if there’s anything I can do to improve upon what I’m doing.

I also really like creating in-depth documentation on topics when researching something: the act of writing down and organizing information helps me understand it better (that’s why, for instance, I have a beefy “financial/” folder, with a ton of research into somewhat dry, but important topics - portfolio rebalancing, health and auto insurance, home ownership, and so on).

Incoherent rambling aside, I’m hoping this post will spark some ideas about how to set up and use your own personal wiki.

-

Minimalist phone launcher

For the past few years I’ve been trying to focus on having more mindful experiences in my life. I find it rewarding to be present in the moment, without my thoughts rushing onto whatever awaits me next.

I present to you the biggest distraction: my phone.

I use it to get in touch with people I love. I’m more productive at work because I have access to information on the go. I also use my phone to browse Reddit, YouTube, and every other media outlet imaginable. Even worse, sometimes I just waste time tinkering with the settings or mindlessly browsing through the apps I have installed.

It’s an attention sink.

Nearly a year ago as I was browsing the Google Play Store I bumped into a new launcher: KISS. The tag line caught my attention: “Keep It Simple, Stupid”. I went ahead and downloaded the launcher. I haven’t changed to another launcher since.

Here’s how my home screen looks today:

There’s nothing besides a single search bar. The search bar takes me to the apps I need, web searches I’m interested in, or people I’m trying to reach out to.

It’s simple to use. Start typing an app or a contact name, and the results show up above the search bar:

This simple concept has been responsible for cutting hours upon hours from my phone usage. Opening an app becomes a more deliberate experience: I open my phone with a purpose (granted this purpose might be to kill hours looking at cat videos). There’s no more scrolling through everything I have installed just to find something to stimulate my attention for a few more seconds.

You can download the KISS Launcher for Android from Google Play Store.

-

A year with Pebble Time Round

Disclaimer: This post was not endorsed by Pebble, nor am I affiliated with Pebble.

About a year ago I tried out almost all wearables Pebble had to offer at the time - the original, Pebble Time, and my favorite - Pebble Time Round. I wouldn’t call myself a fanboy, but Pebble watches are pretty damn great.

Back then I was in the market for a smartwatch - something stylish, inexpensive, and durable, to show time and notifications. After some research I ruled out all other wearables on the market: some were too centered around fitness (not my market), and some tried to put a computer with a tiny screen on your hand (I’m looking at you, Apple and Google). I wasn’t interested in either, and I was charmed by the simplicity of Pebble.

First, I got the original Pebble. I was blown away by the battery life which neared two weeks, the beautiful minimalist design, and the absence of a touch screen. The last one is probably the main reason why I’m still using Pebble.

It’s a watch, it has a tiny screen the size of my thumb. How am I supposed to control it with gestures with 100% accuracy? Unlike with a phone, I interact with my watch often during more demanding activities - cycling, running, gym, meeting, etc. Having physical buttons doesn’t require me to look at a screen as I perform an action (quick glance to read a notification is enough - I can reply or dismiss without having to look at the watch again).

After using the original Pebble for a few weeks I was curious to try out Pebble Time. I enjoyed having a colorful display, the battery life was almost as long, and the watch felt smaller than the original one. It was a decent choice, but I still couldn’t help but feel like a square digital watch didn’t fit my style.

That’s when I decided to try out Pebble Time Round. It’s the smallest of the three, and definitely one of the thinnest smartwatches available (at only 7.5 mm). I went for a silver model with a 14 mm strap. Initially there was a lack of affordable straps, but after some time GadgetWraps filled in that niche.

It’s been a year now, and it’s still going strong. Pebble Time Round (or PTR as people call it) doesn’t have the longest battery life, averaging about 2 days until hitting the low power mode (when Pebble watches run low on juice they only display time). I usually charge it daily, since charging a 56 mAh battery doesn’t take long (it gets a full day of use from a 15 minute charge).

PTR is much more a watch than anything else: it looks good, and it shows time. All the necessary things are available at a glance - calendar, notifications, texts, weather, music controls, timers and alarms. I use voice dictation to send out an occasional text.

I work in a corporate setting, with a sometimes difficult to manage number of meetings, constant Hangouts pings, and a stream of emails. Pebble helps me easily navigate a hectic daily routine without having to pull up my phone or my laptop to look up the next conference room, meeting attendees, or reply to a quick ping.

Thanks to an app marketplace similar to Google Play Store (with most if not all the apps and watchfaces free) I find it easy to customize Pebble based on the situation I’m in. I’m traveling and need to be able to pull up my flight? Check. Need to call an Uber from my wrist? Check. Get walking directions? Check.

To my delight Pebble as a platform is rather close to Linux ideology. Pebble apps are modular and tend to focus on one thing and do one thing well.

Recently a Kickstarter for Pebble 2 was announced. It’s rather unfortunate PTR is not getting an updated version, but to be honest it doesn’t really need one. It’s a fantastic combination of hardware and software which fills a specific niche: a stylish smartwatch for displaying relevant chunks of information.